Description:

Data migration, the subject of this CSE final year project, is the transfer of data between storage types, formats, or computer systems. It is usually performed programmatically so that the migration is automated, which frees people from tedious manual work. It is needed when organizations or individuals change computer systems, upgrade to new systems, or merge systems.

To carry out an effective data migration, the data on the old system is mapped to the new system, which provides a design for data extraction and data loading. The design relates the old data formats to the new system's formats and requirements. Programmatic data migration may involve many phases, but at minimum it includes data extraction, where data is read from the old system, and data loading, where data is written to the new system.
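The extract, map, and load steps can be sketched as follows. This is a minimal illustration, not a prescribed design: two in-memory SQLite databases stand in for the old and new systems, and the table names (`legacy_customers`, `customers`), the column layouts, and the date formats are all hypothetical assumptions chosen only to show how a mapping relates an old format to a new one.

```python
import sqlite3
from datetime import datetime


def extract(old_conn):
    """Extraction: read every row from the (hypothetical) legacy table."""
    return old_conn.execute("SELECT cust_name, dob FROM legacy_customers").fetchall()


def transform(row):
    """Mapping: relate the old format to the new system's format.

    Assumed old format: full name in one field, date as DD/MM/YYYY.
    Assumed new format: separate name fields, ISO 8601 date (YYYY-MM-DD).
    """
    full_name, dob = row
    first, _, last = full_name.partition(" ")
    birth_date = datetime.strptime(dob, "%d/%m/%Y").date().isoformat()
    return first, last, birth_date


def load(new_conn, rows):
    """Loading: write mapped rows to the target table in a single transaction."""
    with new_conn:  # commits on success, rolls back on error
        new_conn.executemany(
            "INSERT INTO customers (first_name, last_name, birth_date) VALUES (?, ?, ?)",
            rows,
        )


def migrate(old_conn, new_conn):
    """Run extract -> map -> load and return the number of migrated rows."""
    mapped = [transform(r) for r in extract(old_conn)]
    load(new_conn, mapped)
    return len(mapped)


def make_demo_databases():
    """Build hypothetical in-memory source and target databases for a dry run."""
    old = sqlite3.connect(":memory:")
    old.execute("CREATE TABLE legacy_customers (cust_name TEXT, dob TEXT)")
    old.executemany(
        "INSERT INTO legacy_customers VALUES (?, ?)",
        [("Ada Lovelace", "10/12/1815"), ("Alan Turing", "23/06/1912")],
    )
    new = sqlite3.connect(":memory:")
    new.execute(
        "CREATE TABLE customers (first_name TEXT, last_name TEXT, birth_date TEXT)"
    )
    return old, new


if __name__ == "__main__":
    old_db, new_db = make_demo_databases()
    print("migrated rows:", migrate(old_db, new_db))
    print(new_db.execute("SELECT * FROM customers").fetchall())
```

Keeping `transform` separate from extraction and loading means the mapping (the "design" described above) can be reviewed and tested on its own before any data is written to the target system.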

If a fixed input file specification is defined for loading data onto the target system, a pre-load 'data validation' step can be inserted into the standard ETL (extract, transform, load) process. Such a validation step examines the data to be transferred to ensure that it meets the predefined criteria of the target environment and conforms to the input file specification. For applications of moderate to high complexity, the data migration phases (design, extraction, cleansing, load, verification) are commonly repeated several times before the new system is deployed.
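A pre-load validation step of this kind might look like the sketch below. It assumes, purely for illustration, that the input file is CSV and that the specification (`INPUT_SPEC`, a hypothetical name) maps each required field to a regular expression its value must fully match. Rows that fail are set aside with reasons instead of being loaded.

```python
import csv
import io
import re

# Hypothetical input file specification for the target system:
# each required field maps to a regular expression its value must fully match.
INPUT_SPEC = {
    "customer_id": r"\d{1,10}",
    "email": r"[^@\s]+@[^@\s]+\.[^@\s]+",
    "birth_date": r"\d{4}-\d{2}-\d{2}",  # checks the ISO 8601 shape only, not calendar validity
}


def validate_row(row, spec=INPUT_SPEC):
    """Return a list of problems for one record; an empty list means it passes."""
    problems = []
    for field, pattern in spec.items():
        value = row.get(field)
        if value is None or value == "":
            problems.append(f"{field}: missing")
        elif not re.fullmatch(pattern, value):
            problems.append(f"{field}: {value!r} does not match spec")
    return problems


def pre_load_validation(csv_text, spec=INPUT_SPEC):
    """Split an input file into loadable rows and rejected rows (with reasons)."""
    reader = csv.DictReader(io.StringIO(csv_text))
    missing_cols = set(spec) - set(reader.fieldnames or [])
    if missing_cols:
        # The whole file violates the specification; reject it before any load.
        raise ValueError(f"input file lacks required columns: {sorted(missing_cols)}")
    accepted, rejected = [], []
    # Line numbers assume one record per physical line; line 1 is the header.
    for line_no, row in enumerate(reader, start=2):
        problems = validate_row(row, spec)
        if problems:
            rejected.append((line_no, problems))
        else:
            accepted.append(row)
    return accepted, rejected


if __name__ == "__main__":
    sample = (
        "customer_id,email,birth_date\n"
        "42,ada@example.com,1815-12-10\n"
        "x7,not-an-email,10/12/1815\n"
    )
    ok, bad = pre_load_validation(sample)
    print(len(ok), "accepted")
    for line_no, problems in bad:
        print("line", line_no, problems)
```

Because the rejected rows carry line numbers and reasons, the report can feed the cleansing phase of the next migration iteration.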

Data compression is also useful, because it reduces the consumption of expensive resources such as hard disk space and transmission bandwidth. On the downside, compressed data must be decompressed before it can be used, and this extra processing may be detrimental to some applications. For instance, a video compression scheme may require expensive hardware to decompress the video fast enough for it to be viewed while it is being decompressed.

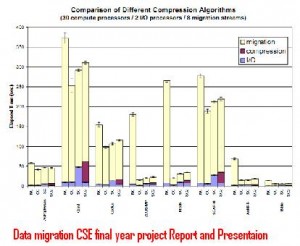

The design of data compression schemes therefore involves trade-offs among various factors, including the degree of compression, the amount of distortion introduced, and the computational resources required to compress and decompress the data.
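One of these trade-offs, degree of compression versus computation, can be observed with Python's standard `zlib` module, whose `level` argument ranges from 1 (fastest) to 9 (smallest output). The sketch below is illustrative only: the sample data is synthetic and the chosen levels are arbitrary, so actual sizes and timings will depend on the data and the machine.

```python
import time
import zlib


def compare_levels(data, levels=(1, 6, 9)):
    """Compress `data` at several zlib levels and report size, ratio and time."""
    results = []
    for level in levels:
        start = time.perf_counter()
        packed = zlib.compress(data, level)
        elapsed = time.perf_counter() - start
        # Compressed bytes are unusable until decompressed; verify the round trip.
        if zlib.decompress(packed) != data:
            raise RuntimeError(f"round trip failed at level {level}")
        results.append({
            "level": level,
            "compressed_bytes": len(packed),
            "ratio": len(data) / len(packed),
            "seconds": elapsed,
        })
    return results


if __name__ == "__main__":
    # Synthetic, fairly repetitive sample; real ratios depend heavily on the data.
    sample = b"".join(
        f"timestep={i % 50},value={i * 0.5:.1f}\n".encode() for i in range(100_000)
    )
    for r in compare_levels(sample):
        print(f"level {r['level']}: {r['compressed_bytes']} bytes, "
              f"ratio {r['ratio']:.1f}, {r['seconds'] * 1000:.1f} ms")
```

Since `zlib` is lossless, this sketch exercises only the ratio-versus-computation axis; the distortion axis applies to lossy schemes.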

Data Compression:

There are two kinds of data compression: lossy and lossless. Generic, data-specific, and parallel compression algorithms can improve file I/O performance and apparent Internet bandwidth. However, integrating compression with long-distance transport of data from today's parallel simulations raises several issues.
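The difference between the two kinds can be shown on floating-point simulation-style data. In the sketch below, the lossless path runs `zlib` on the raw 64-bit floats and restores them bit for bit. The lossy path is our own illustrative choice (simple quantization to multiples of a step size before `zlib`), not a method taken from this project; it trades a bounded error of at most half a step for a smaller output.

```python
import math
import zlib
from array import array


def lossless_roundtrip(values):
    """Lossless: zlib on the raw float64 bytes; decompression restores every bit."""
    raw = array("d", values).tobytes()
    packed = zlib.compress(raw)
    restored = array("d")
    restored.frombytes(zlib.decompress(packed))
    return list(restored), len(packed)


def lossy_roundtrip(values, step):
    """Lossy: quantize each value to a multiple of `step`, then compress the integers.

    Reconstruction error is at most step / 2 (plus floating-point rounding);
    coarser steps discard more information and usually compress better.
    """
    quantized = array("q", (round(v / step) for v in values))
    packed = zlib.compress(quantized.tobytes())
    restored = array("q")
    restored.frombytes(zlib.decompress(packed))
    return [q * step for q in restored], len(packed)


if __name__ == "__main__":
    signal = [math.sin(i / 100) for i in range(10_000)]
    exact, lossless_size = lossless_roundtrip(signal)
    approx, lossy_size = lossy_roundtrip(signal, step=1e-3)
    max_err = max(abs(a - b) for a, b in zip(signal, approx))
    print(f"lossless: {lossless_size} bytes, identical={exact == signal}")
    print(f"lossy:    {lossy_size} bytes, max error={max_err:.2e}")
```

The `step` parameter is the distortion knob mentioned earlier: whether an error of half a step is acceptable depends on how the data will be used after migration.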

Issues:

These issues include: What compression ratios can we expect? Will they fluctuate over the lifetime of the application? If so, how should we decide whether to compress? Will the compression ratios differ with the degree of parallelism? If so, how can we handle the resulting load imbalance during migration? Are special compression algorithms needed? Can we exploit the time-series nature of simulation snapshots and checkpoints to further improve compression performance? What performance can we expect on today's supercomputers and over the Internet?
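Two of these questions (whether to compress at all, and whether consecutive snapshots can be exploited) can be explored with a per-snapshot decision like the sketch below. It is a hypothetical design, not this project's method: it tries plain `zlib` and `zlib` on the byte-wise XOR against the previous snapshot, keeps the smaller result, and stores the data raw when the best ratio falls below an assumed threshold (`min_ratio=1.2`). The one-byte tags `RAW`, `ZLIB`, and `DELTA_ZLIB` are likewise invented for illustration.

```python
import zlib

RAW, ZLIB, DELTA_ZLIB = 0, 1, 2  # hypothetical one-byte encoding tags


def xor_bytes(a, b):
    """Byte-wise XOR; regions unchanged between snapshots become runs of zeros."""
    return bytes(x ^ y for x, y in zip(a, b))


def encode_snapshot(current, previous=None, min_ratio=1.2):
    """Pick the cheapest encoding for one snapshot.

    Tries plain zlib and, when a same-sized previous snapshot exists, zlib on the
    XOR delta. Falls back to RAW when neither reaches `min_ratio`, so CPU time
    is not spent compressing and decompressing data that barely shrinks.
    """
    candidates = [(ZLIB, zlib.compress(current))]
    if previous is not None and len(previous) == len(current):
        candidates.append((DELTA_ZLIB, zlib.compress(xor_bytes(current, previous))))
    tag, payload = min(candidates, key=lambda c: len(c[1]))
    if len(current) / max(len(payload), 1) < min_ratio:
        tag, payload = RAW, current
    return bytes([tag]) + payload


def decode_snapshot(blob, previous=None):
    """Invert encode_snapshot; delta-encoded blobs need the same previous snapshot."""
    tag, payload = blob[0], blob[1:]
    if tag == RAW:
        return payload
    if tag == ZLIB:
        return zlib.decompress(payload)
    if tag == DELTA_ZLIB:
        if previous is None:
            raise ValueError("delta-encoded snapshot needs the previous snapshot")
        return xor_bytes(zlib.decompress(payload), previous)
    raise ValueError(f"unknown encoding tag {tag}")
```

Because the decision is made per snapshot, it adapts if compression ratios fluctuate over the application's lifetime. The cost is that a receiver must keep the previous snapshot to decode delta-encoded ones.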

