Surveillance is an integral part of security. The main objective of the Video Surveillance System IoT project is to build an effective system that can be used across different domains and technologies. The system uses AI and IoT to detect human intrusion and breaches on the user's personal or commercial property in real time.

It helps users to secure their property with the help of advanced artificial intelligence. The resulting system is fast and accurate, thus helping users with more secure surveillance systems.

For the most part, surveillance means watching for something undesirable to happen. The application is a system that provides real-time monitoring and sends an alert when a human is detected on the user's property in their absence.

OBJECTIVES:

The main objective is to build an effective Video Surveillance System that can be used across different domains and technologies. The system is used to detect people trying to breach security in the personal or commercial property of the user in real time and send a message along with a short video clip to the user.

PURPOSE OF EXISTING SYSTEM:

Currently, existing surveillance systems can keep video recordings of homes, offices, banks, and so on. But those recordings are useful only after an incident or robbery has happened. No updates are provided in real time when a breach occurs.

Imagine you are at home and someone breaks in and steals money or goods from your office or property. Or suppose you are out of town for a few days and there is a robbery at your home. You learn about the breach only after you come back, or when someone else notices it some time later and tells you.

You can act only after the breach has happened, not while it is happening. Our system resolves this issue with real-time monitoring and updates.

SCOPE OF SYSTEM:

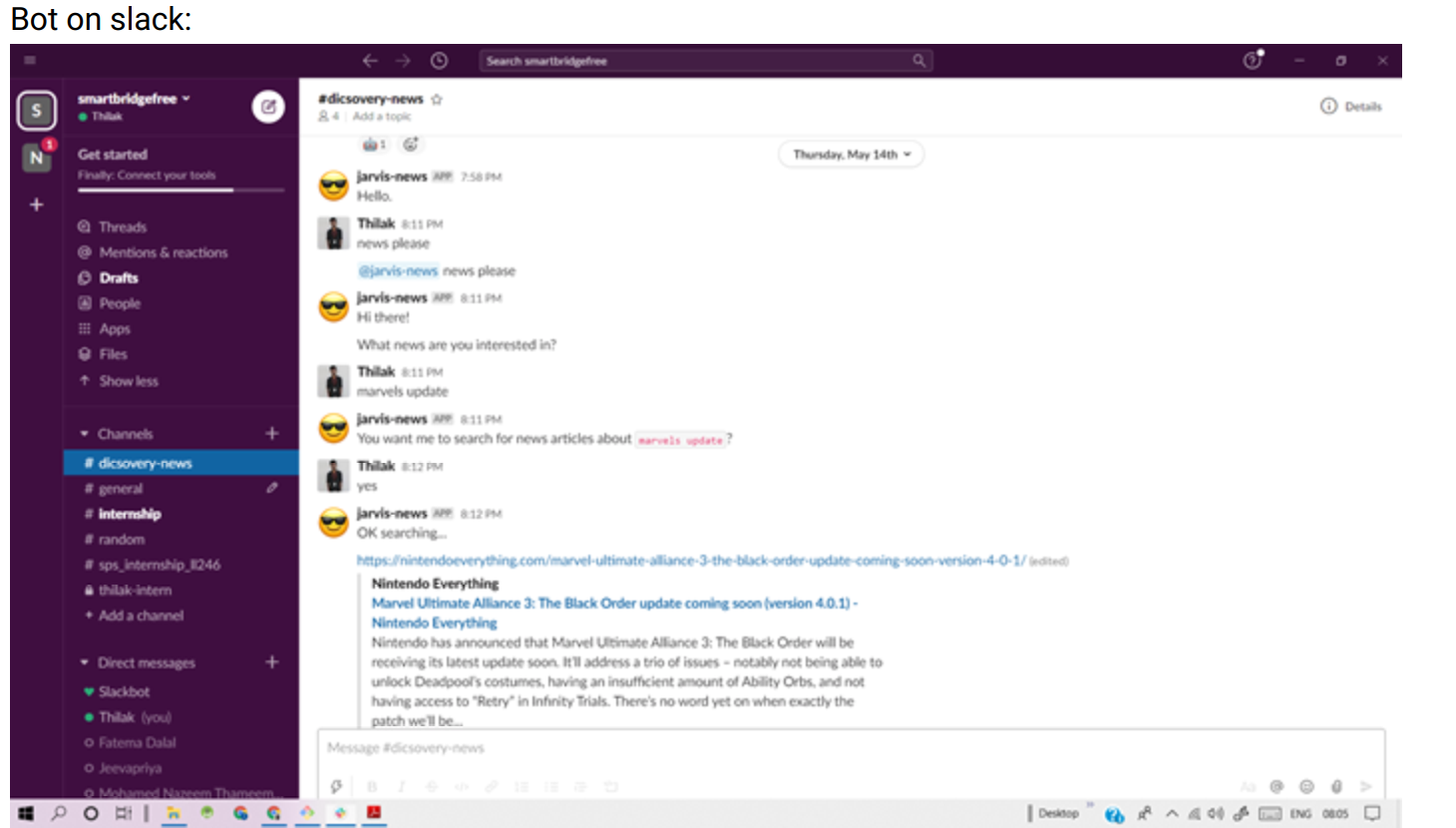

The Video Surveillance System can be implemented in any residential, industrial, or commercial property. The system detects any human intrusion on the user's property and, as soon as it detects a human, sends a notification along with a 10-second video clip.
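The 10-second clip behaviour described above can be sketched as follows. This is a minimal illustration, not the project's actual code: the class name `ClipRecorder`, the frame rate, and the representation of frames as `bytes` are all assumptions made for the example.

```python
from typing import List, Optional


class ClipRecorder:
    """Collects frames for a fixed-length clip once a human is detected.

    Hypothetical helper: the name and parameters are assumptions,
    not part of the original project.
    """

    def __init__(self, fps: int = 10, clip_seconds: int = 10) -> None:
        # Number of frames that make up one clip (e.g. 10 fps * 10 s = 100).
        self.frames_needed = fps * clip_seconds
        self._frames: List[bytes] = []
        self._recording = False

    def feed(self, frame: bytes, human_detected: bool) -> Optional[List[bytes]]:
        """Feed one frame; return the finished clip when complete, else None."""
        if not self._recording and human_detected:
            self._recording = True  # the first detection starts the clip
        if not self._recording:
            return None
        self._frames.append(frame)
        if len(self._frames) < self.frames_needed:
            return None
        # Clip is complete: hand it over and reset for the next detection.
        clip, self._frames, self._recording = self._frames, [], False
        return clip
```

Once recording has started, the recorder keeps collecting frames even if the person leaves the view, so the user always receives a full clip.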

PROBLEM DEFINITION:

This Video Surveillance System project aims to develop an advanced surveillance system that can keep monitoring homes, offices, banks, and so on. With its help, you can find out if anyone breaches your security in your absence. We simply have to integrate our system into the user's existing surveillance system.

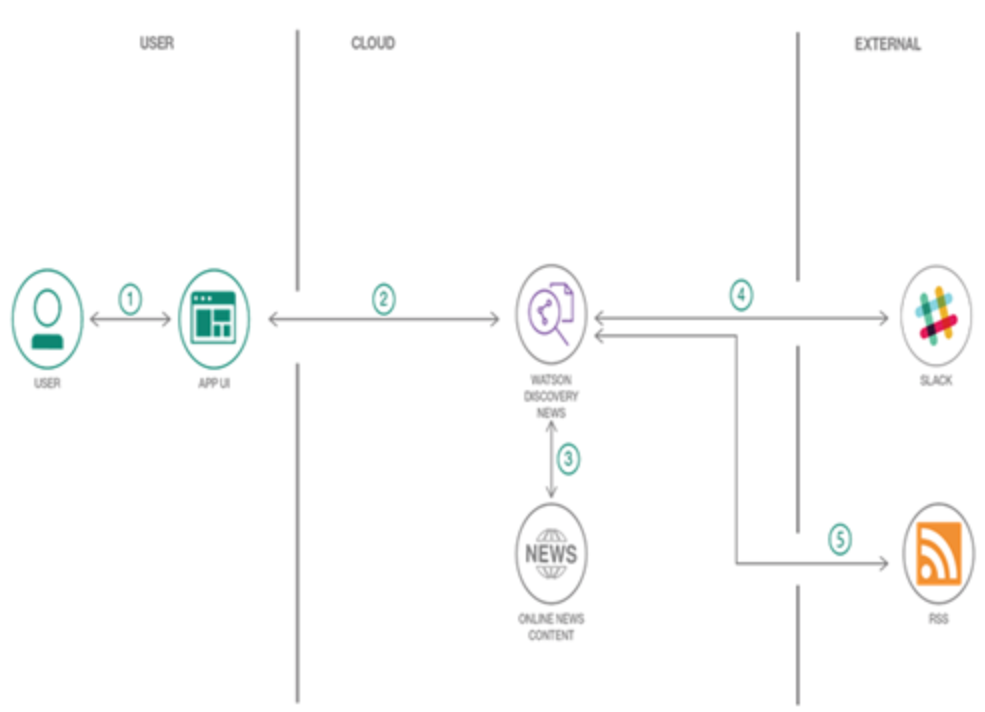

Module specification:

- Raspberry Pi

- Camera

- Server

- SNS

- S3

Need Of Modules:

- Raspberry Pi as a client to send frames to the server.

- Camera to capture live video streams.

- Server for processing frames and detecting humans.

- SNS sends a multimedia message to a user when someone tries to breach security.

- S3 to store a short video clip of the breach and send it to the user.
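The way the storage and notification modules fit together can be sketched as below. This is a rough outline under stated assumptions: `handle_breach`, `store_clip`, and `notify_user` are hypothetical names, and the two callables stand in for whatever S3 upload and SNS publish wrappers the deployment actually uses.

```python
from dataclasses import dataclass
from datetime import datetime, timezone
from typing import Callable, Optional


@dataclass
class BreachAlert:
    clip_key: str  # storage key under which the clip was saved
    message: str   # text that was sent to the user


def handle_breach(
    clip: bytes,
    camera_id: str,
    store_clip: Callable[[str, bytes], str],  # assumed: saves the clip, returns a URL
    notify_user: Callable[[str], None],       # assumed: sends a message to the user
    now: Optional[datetime] = None,
) -> BreachAlert:
    """Store the clip first, then notify the user with a link to it."""
    now = now or datetime.now(timezone.utc)
    key = f"clips/{camera_id}/{now.strftime('%Y%m%dT%H%M%SZ')}.mp4"
    url = store_clip(key, clip)
    message = (
        f"Human detected by camera {camera_id} at "
        f"{now.strftime('%Y-%m-%d %H:%M:%S')} UTC. Clip: {url}"
    )
    notify_user(message)
    return BreachAlert(clip_key=key, message=message)
```

Passing the storage and notification steps in as functions keeps the server logic testable without network access, and the clip is uploaded before the alert goes out so the link in the message is already valid.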

Non-Functional Requirements:

EFFICIENCY REQUIREMENT :

When AI takes care of the property, customers can relax and do not have to worry about their security.

RELIABILITY REQUIREMENT :

The system should provide a reliable environment for both the client and the server.

USABILITY REQUIREMENT :

The system is designed for a secure environment and ease of use.

IMPLEMENTATION REQUIREMENT :

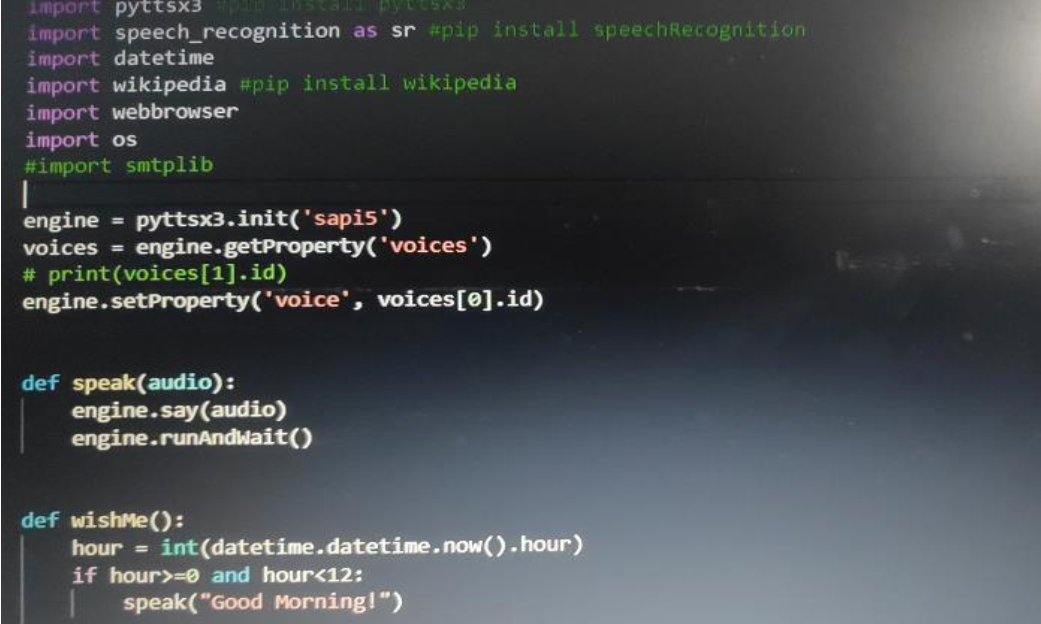

The system is implemented with a Raspberry Pi, a night-vision camera, Python, machine learning, and AI.

DELIVERY REQUIREMENT :

The whole system is expected to be delivered in four months, with a weekly evaluation by the project guide.

Limitations of the System:-

False Positives

Because of varying lighting conditions and camera resolutions, the system sometimes reports a human when there is none. Such false positives can be tolerated. The more serious problem is the opposite case, where a human is present and the system fails to detect them.
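One common way to reduce isolated false alarms, shown here only as a possible mitigation and not as something the project is known to implement, is to raise an alert only when several of the most recent frames contain a detection. The class name `DetectionSmoother` and its parameters are assumptions for this sketch.

```python
from collections import deque


class DetectionSmoother:
    """Reports a human only if enough of the last few frames agree.

    Hypothetical helper for illustration; not part of the original project.
    """

    def __init__(self, window: int = 5, min_hits: int = 3) -> None:
        if not 0 < min_hits <= window:
            raise ValueError("min_hits must be between 1 and window")
        self.min_hits = min_hits
        # Keeps only the most recent `window` per-frame results.
        self._recent = deque(maxlen=window)

    def update(self, detected: bool) -> bool:
        """Record one per-frame result and return the smoothed decision."""
        self._recent.append(detected)
        return sum(self._recent) >= self.min_hits
```

Since a missed human is the more serious error, `min_hits` should be kept small relative to `window`; a stricter setting suppresses more false alarms but also delays or drops real alerts.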

Limited Processing Power

As we are using a Raspberry Pi, a low-power single-board computer, to send feeds to the server, it cannot handle multiple feeds at once, and the system becomes slower as the number of devices increases.
