Introduction

Most major IT companies now use online coding competitions to assess how well upcoming software engineers handle pressure and how strong their fundamentals are. This has led to a significant increase in the number of online judges and coding competitions. Most students are now unsure which platform to choose and how to approach these coding contests on time, every time. This is where the Competitive Programming Platform comes into the picture.

The Competitive Programming Platform is a collection of extensions, APIs, bots, and web apps that aims to simplify competitive programming. With this project, students can observe, compare, and shortlist online judges, work on improving their performance on them, and compare their progress and achievements with their peers in a healthy environment. The technologies used in this project will be Python, JavaScript, Node, Flask, Selenium, VueJS, and Tailwind.

Objectives

The main objective is to create a platform on which students can easily select and prepare for online coding competitions in the best possible way.

The key objectives of the Competitive Programming Platform are:

1. View all competitive programming profiles at a glance.

2. Get updates about the latest programming contests.

3. Receive these updates through an email newsletter and push notifications.

4. Fetch global and local leaderboards.

5. Provide a VS Code extension to speed up local development.

6. Provide a Chrome extension to view upcoming contests on the go.

7. Offer a standalone REST API.
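
As an illustration of objective 4, the following is a minimal sketch of how global and local leaderboards could be derived from the same set of profiles. The `Profile` fields, the `leaderboard` function, and the choice of "same institute" as the meaning of "local" are all assumptions made for this example; the project may define them differently.

```python
from dataclasses import dataclass


@dataclass
class Profile:
    handle: str
    institute: str  # hypothetical grouping key for the "local" board
    rating: int


def leaderboard(profiles, institute=None):
    """Return (rank, handle, rating) tuples, highest rating first.

    institute=None gives the global board; otherwise only profiles
    from that institute are ranked (the assumed "local" board).
    """
    pool = [p for p in profiles if institute is None or p.institute == institute]
    # Sort by rating descending, then by handle for a stable order.
    ordered = sorted(pool, key=lambda p: (-p.rating, p.handle))
    board = []
    for position, p in enumerate(ordered, start=1):
        # Tied ratings share the rank of the first tied entry.
        if board and board[-1][2] == p.rating:
            rank = board[-1][0]
        else:
            rank = position
        board.append((rank, p.handle, p.rating))
    return board
```

Calling `leaderboard(profiles)` ranks everyone, while `leaderboard(profiles, "Some Institute")` ranks only that group, so both boards can be served from one data set.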

Methodology

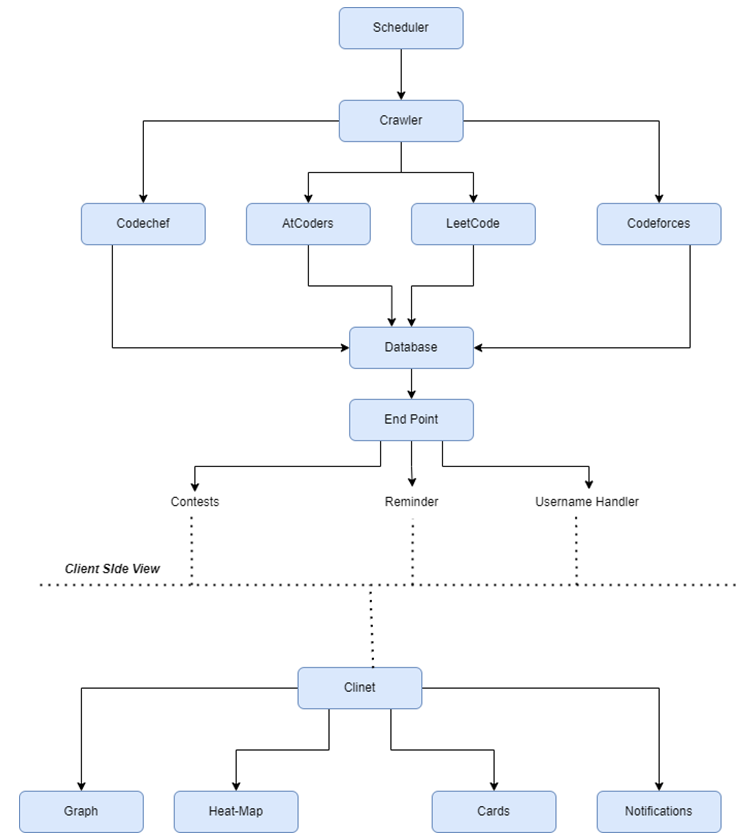

In the first step, we will scrape data from various sources using a crawler built in Python with Selenium. We will store this data in our database and run the pipeline as a cron job every six hours.

Next, we will deliver the extracted data through a single-page application (SPA) built with VueJS. We will use Workbox 6.0 to convert the SPA into a Progressive Web Application with native support for push notifications.

Web scraping: Web scraping is an automated method of obtaining large amounts of data from websites.

Cron jobs in recurrent pipelines: A cron job is normally used to schedule a task that runs periodically. In our case, a cron job runs our Python script, which fetches unstructured HTML data, extracts the fields we need, validates them, and saves them to our database.
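
The extract, validate, and save steps described above can be sketched as follows. This is a simplified illustration, not the project's actual crawler: it assumes the fetched page contains a table with three cells per contest (name, platform, start time as `YYYY-MM-DD HH:MM`), it parses with the standard-library `html.parser`, and it uses `sqlite3` as a stand-in for the project's database so that the example stays self-contained. All function, class, and table names are hypothetical.

```python
import sqlite3
from datetime import datetime
from html.parser import HTMLParser


class ContestRowParser(HTMLParser):
    """Collect the text of every <td> cell, grouped by <tr> row."""

    def __init__(self):
        super().__init__()
        self.rows = []          # finished rows, each a list of cell strings
        self._row = None        # cells of the row being read, or None
        self._in_cell = False

    def handle_starttag(self, tag, attrs):
        if tag == "tr":
            self._row = []
        elif tag == "td" and self._row is not None:
            self._in_cell = True
            self._row.append("")

    def handle_data(self, data):
        if self._in_cell:
            self._row[-1] += data

    def handle_endtag(self, tag):
        if tag == "td":
            self._in_cell = False
        elif tag == "tr" and self._row is not None:
            self.rows.append([cell.strip() for cell in self._row])
            self._row = None
            self._in_cell = False


def extract(html):
    """Turn raw HTML into a list of rows of cell text."""
    parser = ContestRowParser()
    parser.feed(html)
    return parser.rows


def validate(row):
    """Return (name, platform, start_iso), or None if the row is malformed."""
    if len(row) != 3 or not row[0] or not row[1]:
        return None
    try:
        start = datetime.strptime(row[2], "%Y-%m-%d %H:%M")
    except ValueError:
        return None
    return row[0], row[1], start.isoformat()


def save(conn, contests):
    """Insert contests, skipping ones stored by an earlier run."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS contests ("
        "name TEXT, platform TEXT, start TEXT, "
        "UNIQUE(name, platform, start))"
    )
    conn.executemany("INSERT OR IGNORE INTO contests VALUES (?, ?, ?)", contests)
    conn.commit()


def run_pipeline(html, conn):
    """Extract, validate, and save; return the number of valid rows seen."""
    valid = [c for c in map(validate, extract(html)) if c is not None]
    save(conn, valid)
    return len(valid)
```

In a real run, the `html` argument would come from the Selenium crawler (for example, the driver's `page_source`), and a crontab schedule such as `0 */6 * * *` would trigger the script every six hours. The `UNIQUE` constraint together with `INSERT OR IGNORE` keeps repeated runs from storing the same contest twice.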

User interfaces: We will build user-friendly interfaces that give meaning to the extracted data by visualizing it through tables, charts, and graphs.

Workflow

Facilities required

• Vue, Tailwind, ChartJS, Babel, GSAP, Node

• Flask, PostgreSQL, Selenium, Python

• Git, GitHub, CodeQL, VS Code

• NGINX, PM2, Travis, Certbot

Expected Outcome

• Responsive, minimalistic user interface with a clutter-free user experience.

• Powerful REST API that can power other third-party applications.

• A healthy competitive environment that encourages ‘friendly competition’ among peers and makes competitive programming a constructive habit.