| Sr No |

Project Title |

| 1 |

Smart garbage bin for municipal operations. |

| 2 |

Agri-bot: Agriculture robot. |

| 3 |

Swachh Bharat – washroom cleaning robot |

| 4 |

Smart trolley for easy billing using a Raspberry Pi and IoT. |

| 5 |

Industrial product sorting machine with weight identification over a conveyor belt. |

| 6 |

Advanced surveillance robot using a Raspberry Pi. |

| 7 |

Intruder alert system: IoT-based home security with e-mail and photo alerts using a Raspberry Pi. |

| 8 |

Web-application-based home automation using a Raspberry Pi. |

| 9 |

Smart electronic wireless notice board display over IoT. |

| 10 |

Vehicle security system with engine locking and alerting over GSM. |

| 11 |

Smart toll plaza with RFID-based gate opening system and balance alerts by text message. |

| 12 |

Smart medicine pill box: Reminder with voice and display for emergency patients. |

| 13 |

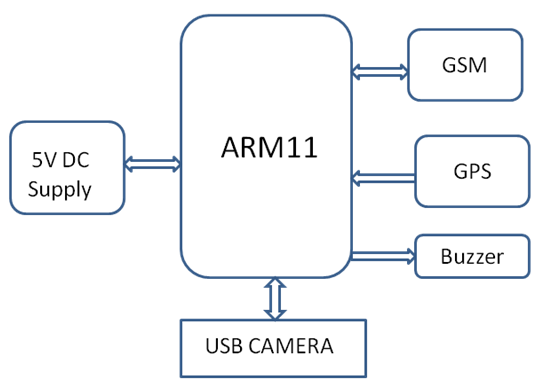

Accident detection with location alerting over GSM and GPS. |

| 14 |

Railway Track Pedestrian Crossing between Platforms (Movable and flexible railway platform). |

| 15 |

Greenhouse monitoring and weather station monitoring over IoT |

| 16 |

Smart assistive device for deaf and dumb people. |

| 17 |

Semi-autonomous Garbage collecting robot. |

| 18 |

Solar-powered grass cutting and pesticide spraying robot. |

| 19 |

Automatic movable road divider with traffic detection and data posting to IoT |

| 20 |

Automatic car washing and drying machine with conveyor support. |

| 21 |

Real-time voice automation system for the college department. |

| 22 |

Patient health monitoring over IoT |

| 23 |

Automatic gear shifting mechanism for two-wheelers. |

| 24 |

A Smart Helmet For Air Quality And Hazardous Event Detection For The Mining Industry over wireless Zigbee |

| 25 |

Semi-autonomous firefighting robot with Android app control. |

| 26 |

Smart wearables: Emergency alerting with location over GSM for women. |

| 27 |

Smart movable travel luggage bag. |

| 28 |

Building smart cities: Automatic gas cylinder booking over GSM with user alert. |

| 29 |

Automation of dry-wet dust collection to support Swachh Bharat Abhiyaan and monitoring over the internet of things (IoT) |

| 30 |

Smart street lights and fault location monitoring in the cloud over IoT |

| 31 |

GSM based notice display with voice announcements. |

| 32 |

IoT-based smart irrigation system |

| 33 |

Smart cities: Traffic data monitoring over IoT for easy transportation/alternative route selection |

| 34 |

Dam gate level monitoring for water resource analysis and dam gate control over IoT |

| 35 |

Public water meter units monitoring and controlling water valve over IoT |

| 36 |

Density-based traffic light system with auto traffic signal transition for VIP vehicles/ambulance |

| 37 |

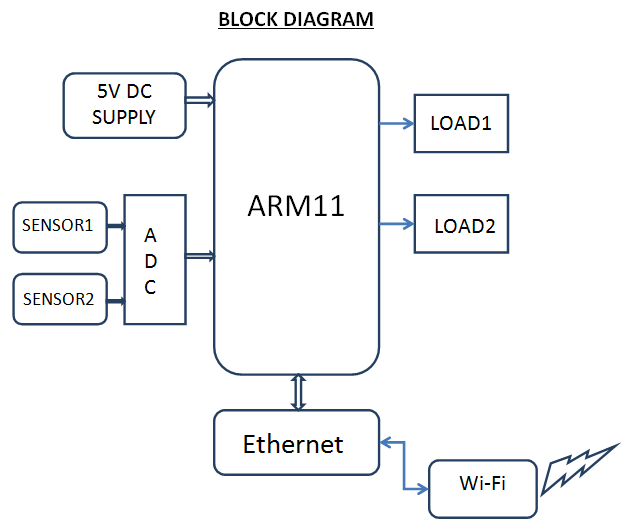

Wi-Fi based Wireless Device control for Industrial Automation Using Arduino |

| 38 |

Ghat road oncoming vehicle alerting system with sound and vibration over an Android application. |

| 39 |

RFID-based student attendance recording and monitoring over IoT |

| 40 |

Smart colony: RFID based gate security system, street lights, and water pump automation. |

| 41 |

Water boat for surveillance with video and audio |

| 42 |

Smart rain analyzer with GSM and a loud siren. |

| 43 |

Smart bike. |

| 44 |

Smart wheelchair with Android application control. |

| 45 |

Home automation over WiFi using Android application. |

| 46 |

Intelligent blind stick with SMS alert |

| 47 |

Student performance enquiry system using GSM. |

| 48 |

Automatic plant watering and tank water level alert system |

| 49 |

Swachh Bharat mission robot: Smart mosquito trap |

| 50 |

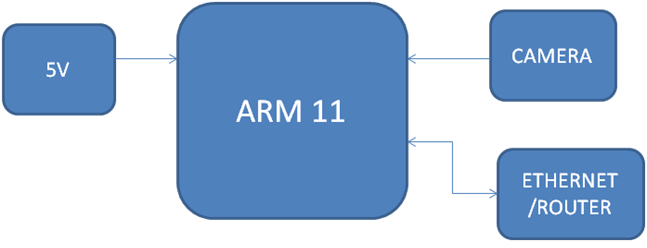

Dual axis solar tracking robot for surveillance with IP camera |

| 51 |

Rocker-bogie suspension system |

| 52 |

GSM protocol Integrated Energy Management System |

| 53 |

Home automation using Android app |

| 54 |

Merrain navigation robot |

| 55 |

Drainage pipe cleaning robot |

| 56 |

RFID and fingerprint-based library automation |

| 57 |

Classroom automation using Android app |

| 58 |

Intelligent car parking using robotic techniques |

| 59 |

Agriculture automation using GSM (soil moisture level control and motor control) |

| 60 |

Solar-based grass cutting robot with obstacle avoidance and path planning |

| 61 |

Marine navigation robot |

| 62 |

Glass cleaning robot |

| 63 |

Hotel automation using a line tracking robot and Android app |

| 64 |

Advanced Supervisory Security Management System For ATMs Using GSM & MEMS |

| 65 |

Touch Panel Based Industrial Device Management System For Handicapped Persons |

| 66 |

Modern public garden |

| 67 |

Hotel automation for ordering and billing |

| 68 |

Advanced parking system showing nearest availability for parking your vehicle |

| 69 |

College bus information to students |

| 70 |

Automatic efficient street light controller using RTC and LDR |

| 71 |

Head movement controlled car driving system to assist the physically challenged |

| 72 |

Patient health monitoring (heartbeat and body temperature) with doctor alert reporting over the internet of things (IoT) |

| 73 |

Breath and Lungs health analyzer with Respiratory analyzer over the internet of things (IoT) |

| 74 |

Movable road divider for organized vehicular traffic control with monitoring over the internet of things (IoT) |

| 75 |

Automation of dry-wet dust collection to support Swachh Bharat Abhiyaan and monitoring over the internet of things (IoT) |

| 76 |

Automatic movable railway platform with train arrival detection and monitoring over the internet of things (IoT) |

| 77 |

Automatic plant irrigation system with dry/wet soil sensing and 230 V water pump control for agricultural applications and monitoring over the internet of things (IoT) |

| 79 |

Weather station monitoring over the internet of things (IoT) |

| 80 |

Arduino-based footstep power generation system for rural energy applications to run AC and DC loads |

| 81 |

RFID security access control system Using Arduino |

| 82 |

RFID based banking system for secured transaction |

| 83 |

Construction of a Central Control Unit for irrigation water pumps: a cost-effective method to control an entire village's water pumps with user-level authentication |

| 84 |

RFID based Library Automation System using AT89S52 |

| 85 |

Prepaid coffee dispenser with biometric recharge using fingerprint |

| 86 |

Automatic Vehicle Accident Detection and Messaging System using GSM and GPS |

| 87 |

Blind Person Navigation system using GPS & Voice announcement |

| 88 |

Hand-gesture-based wheelchair movement control for the disabled using MEMS (Micro-Electro-Mechanical Systems) technology |

| 89 |

Fish culture automation with auto pumping, temperature control, and food feeding |

| 90 |

Bomb disposal robot with infrared remote control |

| 91 |

Defense robot with CAM and GSM for high-risk rescue operations |

| 92 |

Solar-based defense cleaning robot with autonomous charging and navigation |

| 93 |

Automatic ambulance rescue system using GPS |

| 94 |

Bi-pedal humanoid for military operations |

| 95 |

Advanced college electronics automation using Raspberry Pi (IoT) |

| 96 |

Solar based garbage cleaning robot with time slot arrangement |

| 97 |

Solar-based robot for garden grass cutting and watering the plants. |

| 98 |

Under pipe traveling robot to detect Gas line leakage and RFID address navigation to cloud over IoT |

| 99 |

Loco pilot operated unmanned railway gate control to prevent accidents over the Internet of Things |

| 100 |

Smart street lights and fault location monitoring in the cloud over IoT |

| 101 |

Patient health monitoring (heartbeat and body temperature) with doctor alert reporting over the internet of things (IoT) |

| 102 |

Breath and Lungs health analyzer with Respiratory analyzer over IoT |

| 103 |

Non-invasive Blood Pressure Remote Monitoring over the internet of things (IoT) |

| 104 |

Building smart cities: Automatic gas cylinder booking over IoT |

| 105 |

IoT solutions for smart cities: Garbage dustbin management system and reporting to municipal authorities over IoT |

| 106 |

Public water supply grid monitoring to avoid tampering & waterman fraud using the Internet of Things |

| 107 |

Movable road divider for organized vehicular traffic control with monitoring over the internet of things (IoT) |

| 108 |

Automation of dry-wet dust collection to support Swachh Bharat Abhiyaan and monitoring over the internet of things (IoT) |

| 109 |

RFID based pesticides dosing with automatic plant irrigation for agriculture applications |

| 110 |

Construction of a Central Control Unit for irrigation water pumps: a cost-effective method to control an entire village's water pumps with user-level authentication |

| 111 |

Direction control of photovoltaic panel according to the sun direction for maximum power tracking (Model Sunflower) |

| 112 |

Photovoltaic E-Uniform with Peltier plate thermo-cooling technology to warm or cool soldiers who work in extremely high or extremely low temperatures |

| 113 |

Agricultural solar fence security with automatic irrigation and voice announcement alert on PIR live human detection |

| 114 |

Aerodynamic windmill with reverse charge protection for rural power generation applications with battery voltage analyser |

| 115 |

Footstep power generation system for rural energy application |

| 116 |

Automatic movable railway platform with train arrival detection |

| 117 |

Intelligent car wash system with infrared proximity sensor triggered conveyor belt arrangement using AT89S52 MCU |

| 118 |

Super sensitive Industrial Security System with Auto dialer & 60dB loud siren using AT89S52 MCU |

| 119 |

Gesture Controlled Speaking Micro Controller for the deaf & dumb |

| 120 |

Density-based traffic light system with auto traffic signal transition for VIP vehicles / ambulance |

| 121 |

Wireless Speech Recognition enabled smart home automation for the blind and physically challenged |

| 122 |

Under pipe travelling robot to detect Gas line leakage and RFID address navigation to cloud over IoT |

| 123 |

Dam gate level monitoring for water resource analysis and dam gate control over IoT |

| 124 |

Programmable temperature monitor and controller for industrial boilers & ovens with live monitoring in the cloud over the internet of things |

| 126 |

Automatic room light control with visitor counting for power saving applications and monitoring in the cloud over the internet of things |

| 128 |

Patient health monitoring in the cloud (heartbeat and body temperature) with doctor alert reporting over the internet of things |

| 130 |

Breath and Lungs health analyzer with Respiratory analyzer over internet of things (IoT) |

| 131 |

Parking slot availability check and booking system over IoT |

| 132 |

Smart cities: Traffic data monitoring over IoT for easy transportation / alternative route selection |

| 133 |

Tollgate traffic monitor and analyzer using IoT |

| 134 |

Battery Powered Heating and Cooling Suit |

| 135 |

Energy management in an automated Solar powered irrigation |

| 136 |

Smart home energy management system including renewable energy based on ZigBee |

| 137 |

Development of a Cell Phone-Based Vehicle Remote Control System |

| 138 |

Passenger BUS Alert System for Easy Navigation of Blind |

| 139 |

Remote-Control System of High Efficiency and Intelligent Street Lighting Using a ZigBee Network of Devices and Sensors |

| 140 |

Microcontroller managed module for automatic ventilation of vehicle interior |

| 141 |

Wind speed measurement and alert system for tunnel fire safety |

| 142 |

Traffic Sign Recognition for autonomous driving robot |

| 143 |

Android-based home automation with fan speed control |

| 144 |

Android-based password-protected door lock system for industrial applications |

| 145 |

Android-based electronic notice board (16X2 LCD) |

| 146 |

Android-based digital heartbeat rate and temperature monitoring system |

| 147 |

Android-based Robotic Arm with Base Rotation, Elbow and Wrist Motion with a Functional Gripper |

| 148 |

Android-based hand gesture robot control using the phone's built-in MEMS (Micro-Electro-Mechanical Systems) sensor |

| 149 |

Android-based multi Terrain Robot to travel on water surface, Indoor and outdoor uneven surfaces for defense applications |

| 150 |

RFID based Airport Luggage security scanning system Using Arduino |

| 151 |

Arduino Based Automatic Temperature based Fan Speed Controller |

| 152 |

Bluetooth based Wireless Device control for Industrial Automation Using Arduino |

| 153 |

Design and implementation of maximum power tracking system by automatic control of solar panel direction according to the sun direction (Model Sunflower) Using Arduino |

| 154 |

Implementation of solar water pump control with four different time slots for power saving applications Using Arduino |

| 155 |

Arduino-based footstep power generation system for rural energy applications to run AC and DC loads |

| 156 |

Solar highway lighting system with automatic daytime turn-off and LCD display using Arduino |

| 157 |

Bluetooth based Robot Control for Metal Detection Applications Using Arduino |