The surge of the coronavirus disease has created a life-and-death situation around the world. The virus is spreading day by day and affecting lives. Machine learning can be applied effectively to trace the disease, predict its growth, and form a strategy to manage its effects. This report gives an overview of the mathematical computation and modeling used to predict that growth.

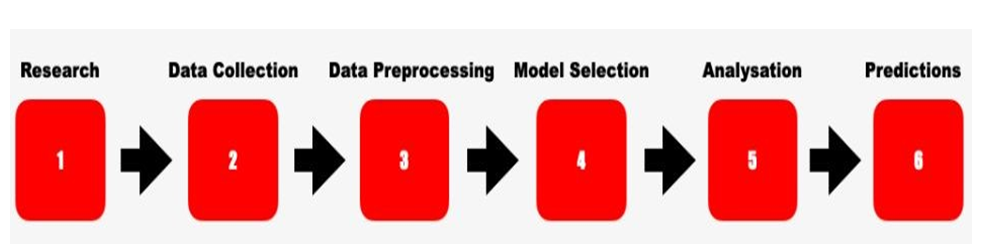

In a machine-learning-based Corona Virus Prediction project, we apply various computations and models to predict growth from a particular dataset. Although the approach can be applied to a dynamic dataset that changes from day to day, this report studies one fixed dataset.

Working on the dataset raised various challenges, such as fitting different machine learning algorithms, which we worked through in order to get the best result. This report gives a brief insight into how the project works: descriptive information about machine learning, the algorithms, the statistical description, and, most importantly, the programming language used, which is Python.

INTRODUCTION

This deadly disease is caused by a virus, a pathogen that can be transmitted from one human to many others, from one animal to many, and from animals to humans. Cases diagnosed early are more likely to be treated successfully, while patients who have been ill for many days are harder to cure.

There is a need for innovation in predicting the growth of the virus through deep, thorough analysis of the large volume of global data on its rise.

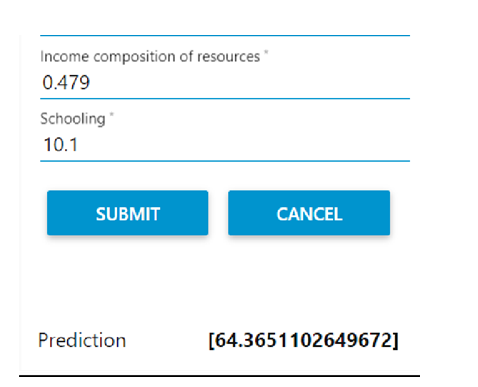

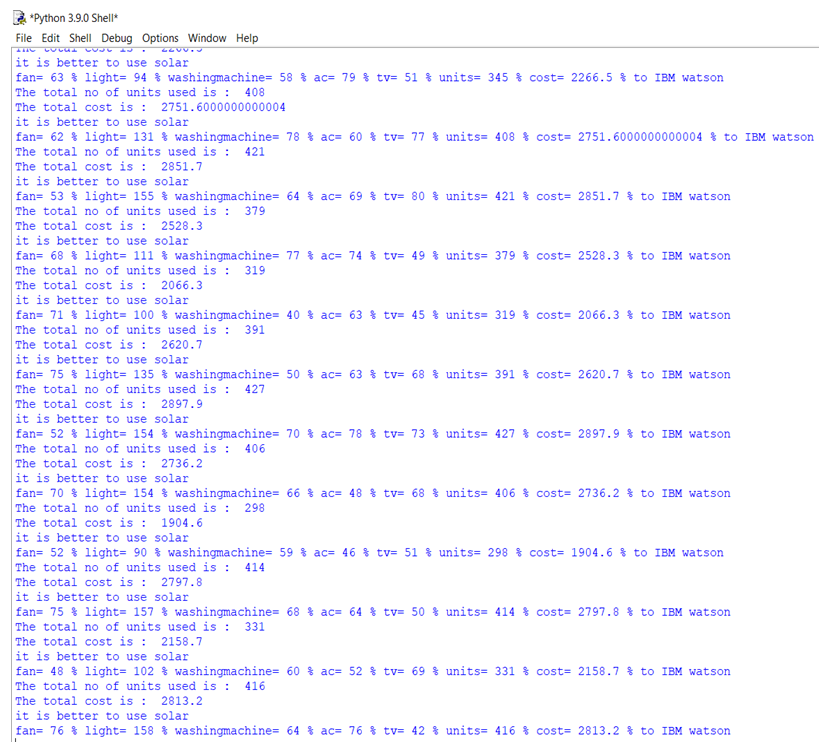

The Corona Virus Prediction project comprises two main features. The first is predicting and analyzing cumulative confirmed cases and presenting them through data visualization. The second is predicting the growth of total, confirmed, and new cases and measuring the accuracy of those predictions.
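As a minimal sketch of how cumulative confirmed cases relate to new cases, the snippet below builds a running total and a day-over-day growth factor with NumPy. The numbers are made up for illustration and are not taken from the project's dataset.

```python
import numpy as np

# Hypothetical daily new confirmed cases (illustrative numbers, not real data).
new_cases = np.array([5, 8, 12, 20, 31, 45])

# Cumulative confirmed cases are the running total of the daily counts.
cumulative = np.cumsum(new_cases)

# Day-over-day growth factor of the cumulative series (today / yesterday).
growth_factor = cumulative[1:] / cumulative[:-1]

print("cumulative:", cumulative)
print("growth factor:", np.round(growth_factor, 2))
```

A growth factor that stays well above 1 means the cumulative count is still rising quickly.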

- PRESENT SYSTEM

Many organizations are working on the same data with the same idea of predicting the growth of the virus by analyzing cases. The COVID crisis has also led many colleges and students to work in teams toward solutions against the coronavirus.

There is much ongoing research, and many projects have already been developed for prediction and for creating awareness.

- PROPOSED SYSTEM

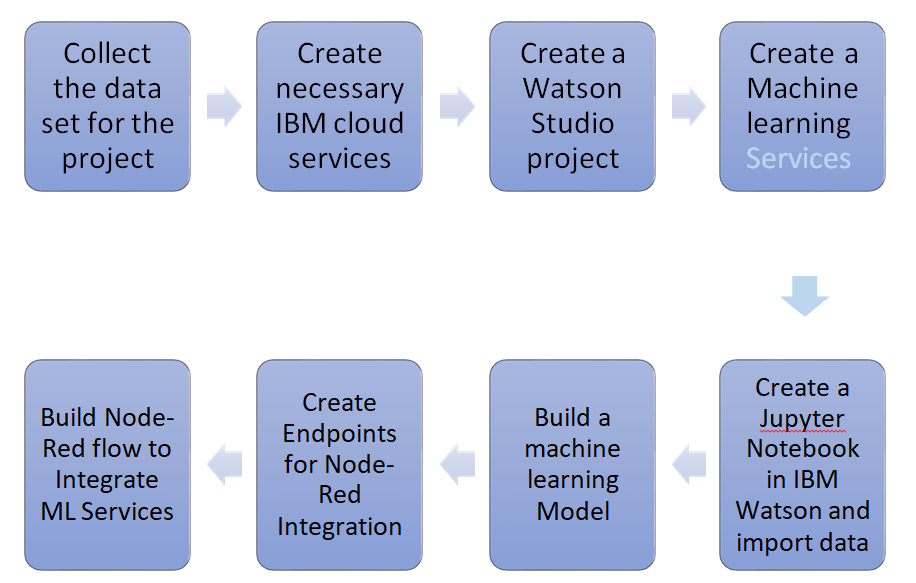

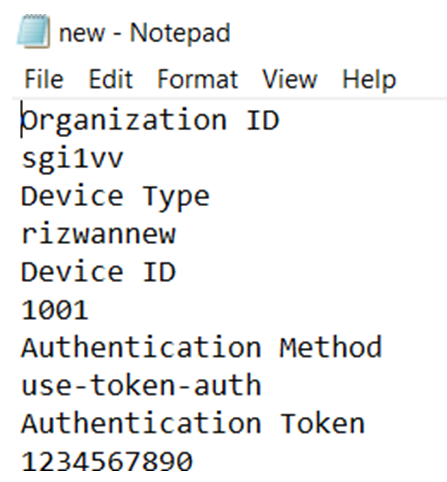

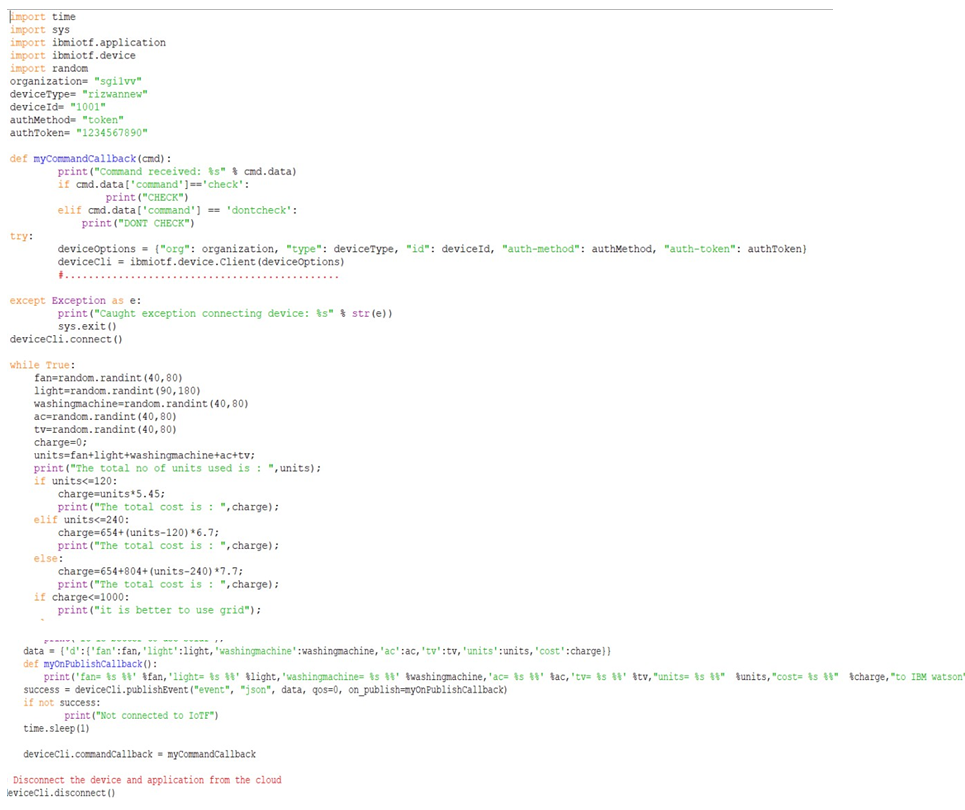

Working on the dataset raised various challenges, such as fitting different machine learning algorithms, which we worked through in order to get the best result. The proposed system covers descriptive information about machine learning, the algorithms, the statistical description, and, most importantly, the programming language used, which is Python.

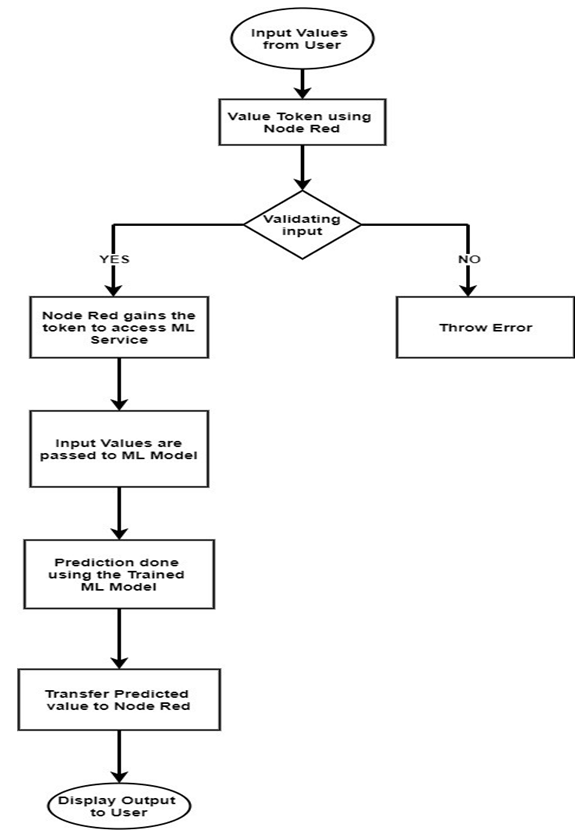

System Design

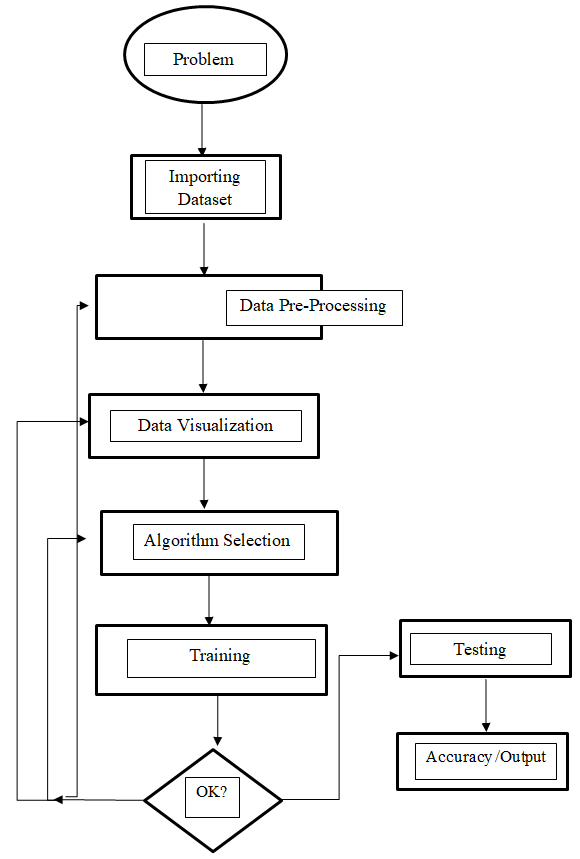

System Flow Chart

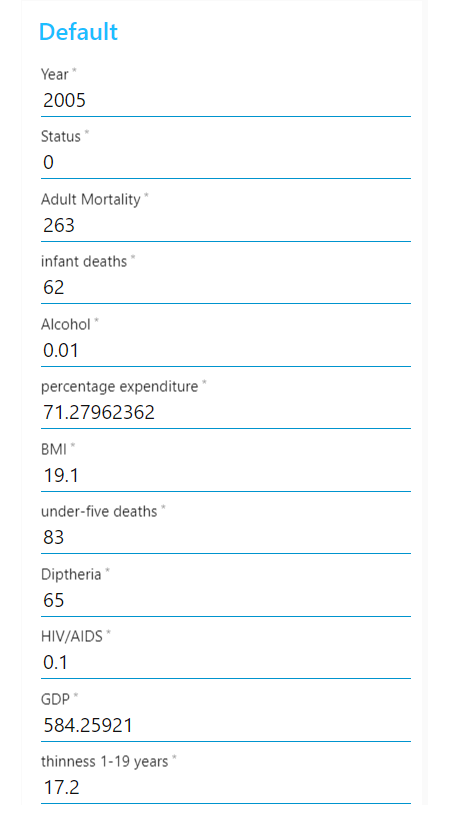

Data Dictionary

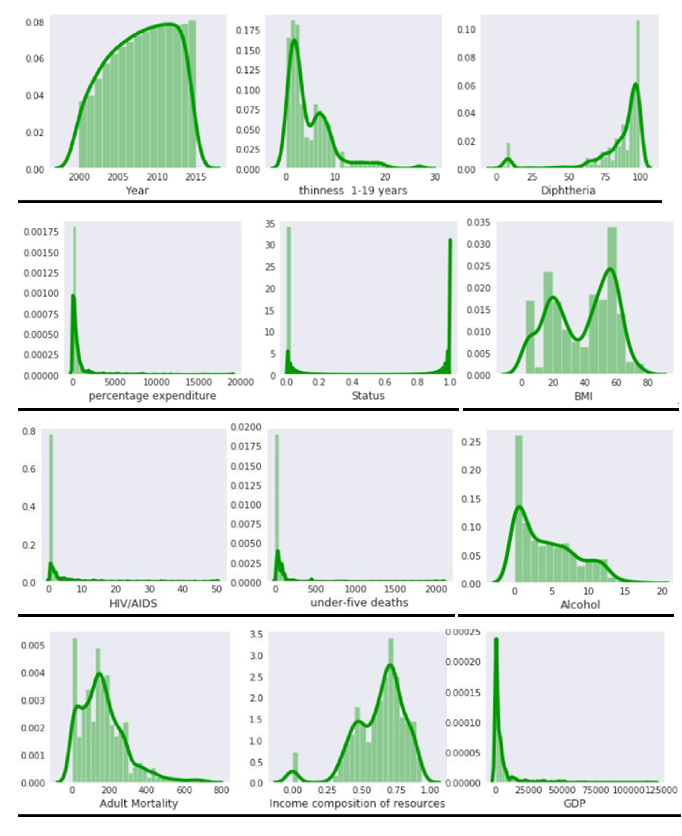

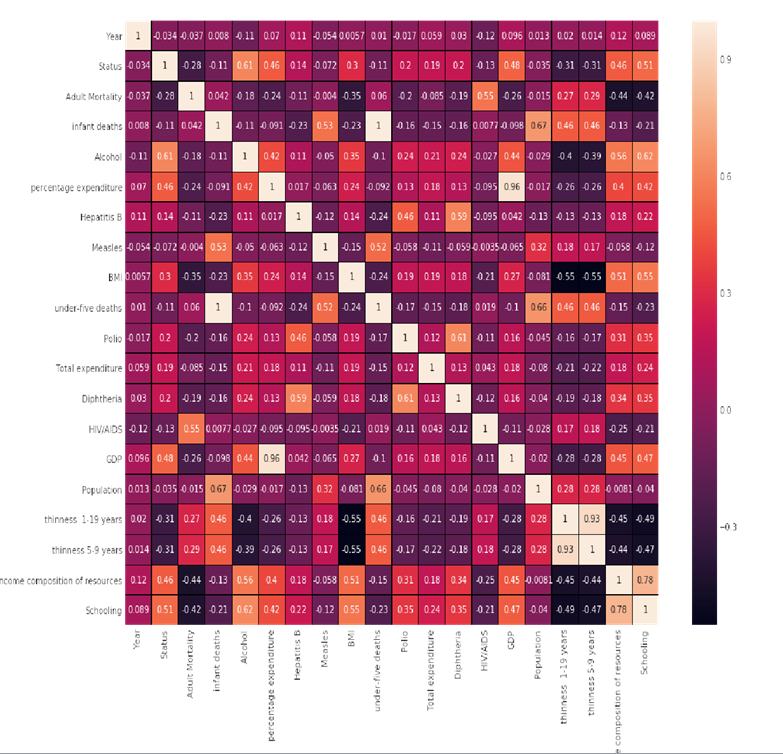

Data Pre-Processing: Our dataset must be pre-processed before it can be used for modeling, so data pre-processing is a required step in this project.
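The report does not list the exact pre-processing steps, so the following is only a sketch of what such a step might look like with pandas. The column names (`Date`, `Confirmed`), the values, and the `preprocess` helper are assumptions made for illustration.

```python
import pandas as pd

# Hypothetical raw records; column names and values are illustrative only.
raw = pd.DataFrame({
    "Date": ["2020-03-03", "2020-03-01", "2020-03-02", "2020-03-04"],
    "Confirmed": [30.0, 10.0, None, 55.0],
})

def preprocess(df):
    """Return a cleaned copy: parsed dates, sorted rows, no missing counts."""
    out = df.copy()
    out["Date"] = pd.to_datetime(out["Date"])
    out = out.sort_values("Date").reset_index(drop=True)
    # Cumulative counts never decrease, so carry the last known value forward.
    out["Confirmed"] = out["Confirmed"].ffill().astype(int)
    # Numeric day index, convenient as a regression feature.
    out["Day"] = (out["Date"] - out["Date"].min()).dt.days
    return out

clean = preprocess(raw)
print(clean)
```

Forward-filling is one possible choice for a cumulative column. Other columns, such as daily new cases, may call for a different strategy.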

Definition of Training Set: The training set is the data that the algorithm will learn from. Learning looks different depending on which algorithm you are using.
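For time-ordered case data, one common way to define the training set is to keep the earliest days for training and hold out the latest days for testing. The sketch below assumes that approach. The `chronological_split` helper and the numbers are hypothetical and not part of the original project.

```python
import numpy as np

def chronological_split(X, y, train_fraction=0.8):
    """Split time-ordered data so the training set precedes the test set."""
    cut = int(len(X) * train_fraction)
    return X[:cut], X[cut:], y[:cut], y[cut:]

# Hypothetical data: day index as the feature, cumulative cases as the target.
days = np.arange(10).reshape(-1, 1)
cases = np.array([1, 2, 4, 7, 11, 16, 22, 29, 37, 46])

X_train, X_test, y_train, y_test = chronological_split(days, cases)
print(len(X_train), "training rows,", len(X_test), "test rows")
```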

Algorithm Selection: Our project has been implemented using various algorithms such as linear regression, random forest, and decision trees.
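To illustrate how the three algorithms might be compared, here is a small sketch using scikit-learn on a synthetic curve. The use of scikit-learn, the data, the interleaved hold-out, and the choice of R² as the score are all assumptions. The report does not state which library or metric was used.

```python
import numpy as np
from sklearn.ensemble import RandomForestRegressor
from sklearn.linear_model import LinearRegression
from sklearn.metrics import r2_score
from sklearn.tree import DecisionTreeRegressor

# Synthetic cumulative-case curve (illustrative, not real data).
days = np.arange(40).reshape(-1, 1)
cases = (2.0 * days.ravel() ** 2 + 10).astype(float)

# Hold out every fourth day for evaluation.
test_mask = days.ravel() % 4 == 3
X_train, y_train = days[~test_mask], cases[~test_mask]
X_test, y_test = days[test_mask], cases[test_mask]

models = {
    "linear regression": LinearRegression(),
    "decision tree": DecisionTreeRegressor(random_state=0),
    "random forest": RandomForestRegressor(n_estimators=50, random_state=0),
}

# Fit each model on the same training data and score it on the held-out days.
scores = {}
for name, model in models.items():
    model.fit(X_train, y_train)
    scores[name] = r2_score(y_test, model.predict(X_test))
    print(f"{name}: R^2 = {scores[name]:.3f}")
```

The interleaved hold-out is used here only to keep the comparison simple. Tree-based models cannot extrapolate beyond the range of targets seen in training, so scores on future days may look quite different.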

Decision Tree: In Python, we use a decision tree to inspect what the model has learned from the training data as a tree structure, which can be reused in future implementations. Because the target variable here takes continuous values, the tree is called a regression tree.
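As a rough sketch of a regression tree and its structure, the example below fits scikit-learn's `DecisionTreeRegressor` to a tiny made-up series and prints the learned splits with `export_text`. The data, the `max_depth` setting, and the feature name `day` are illustrative assumptions.

```python
import numpy as np
from sklearn.tree import DecisionTreeRegressor, export_text

# Hypothetical data: day index versus case count (illustrative only).
days = np.arange(8).reshape(-1, 1)
cases = np.array([1.0, 2.0, 4.0, 8.0, 16.0, 32.0, 64.0, 128.0])

# A shallow tree keeps the printed structure easy to read.
tree = DecisionTreeRegressor(max_depth=2, random_state=0)
tree.fit(days, cases)

# Text rendering of the splits and the value predicted in each leaf.
structure = export_text(tree, feature_names=["day"])
print(structure)

# Each prediction is the mean target of the leaf the input falls into.
prediction = tree.predict(np.array([[6]]))
print("predicted cases on day 6:", prediction[0])
```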

Implementation Work Details

Libraries used

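NumPy

NumPy is the fundamental package for numerical computing in Python. It contains, among other things:

- a powerful N-dimensional array object

- sophisticated (broadcasting) functions

- tools for integrating C/C++ and Fortran code

- useful linear algebra, Fourier transform, and random number capabilities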
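The features listed above can be sketched in a few lines. The arrays below are made-up illustrative values. The example shows an N-dimensional array, broadcasting, and a linear algebra routine (`np.linalg.lstsq`).

```python
import numpy as np

# N-dimensional array: rows are regions, columns are days (illustrative counts).
cases = np.array([[10, 20, 40],
                  [5, 15, 45]])

# Broadcasting: divide each row by its region's population without a loop.
population = np.array([[1000], [500]])
per_capita = cases / population

# Linear algebra: least-squares line through the first region's counts.
days = np.array([0.0, 1.0, 2.0])
A = np.column_stack([days, np.ones_like(days)])
slope, intercept = np.linalg.lstsq(A, cases[0], rcond=None)[0]

print(per_capita)
print("slope:", slope, "intercept:", intercept)
```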

Pandas

Pandas is an open-source, BSD-licensed library providing high-performance, easy-to-use data structures and data analysis tools for the Python programming language.
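As a small illustration of these data structures, the sketch below builds a `DataFrame` of hypothetical case records and aggregates it. The column names and numbers are assumptions made for the example.

```python
import pandas as pd

# Hypothetical records; names and numbers are illustrative only.
df = pd.DataFrame({
    "Country": ["A", "A", "B", "B"],
    "Date": pd.to_datetime(
        ["2020-03-01", "2020-03-02", "2020-03-01", "2020-03-02"]
    ),
    "NewCases": [3, 5, 2, 7],
})

# Total new cases per date across countries (a Series indexed by date).
daily_total = df.groupby("Date")["NewCases"].sum()

# Running total per country, added as a new column.
df["Cumulative"] = df.groupby("Country")["NewCases"].cumsum()

print(daily_total)
print(df)
```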

- Benefits:

Python has long been great for data munging and preparation, but less so for data analysis and modeling. Pandas helps fill this gap, enabling you to carry out your entire data analysis workflow in Python without having to switch to a more domain-specific language like R.

Combined with the excellent IPython toolkit and other libraries, the environment for doing data analysis in Python excels in performance, productivity, and the ability to collaborate.

More work is still needed to make Python a first-class statistical modeling environment.
