Introduction

- The aim of this project is to move all of the gym's manual activities onto a website (an online platform).

- This makes work easier for the gym staff, whose paper-based work is lengthy and somewhat complex.

- The website also helps gym members: they can track their attendance, manage their schedules, and do several other things described below.

- It also allows guest users to apply for a gym membership directly via the website.

- Gym trainers can also track their own attendance and members' workout details via this website.

- Trainers can prepare workout schedules and diet charts for members via this website.

Entities:

- Admin

- Trainer

- Member

- Guest

Project Profile

Requirement Gathering:

ADMIN:

The Admin manages the whole website and has every access right to it. The Admin can do the following:

- Admin can log in.

- Admin can add, update or remove Gym Details.

- Admin can manage the members and trainers of the Gym.

- Admin can manage the attendance of members and trainers.

- Admin can manage memberships.

- Admin can sell Gym products.

- Admin can provide fitness blogs and videos.

- Admin can manage payments.

- Admin can generate reports.

MEMBER:

Members are the Gym's clients. They can use many features of the website, such as purchasing products and viewing attendance. A member can do the following:

- Member can log in.

- Member can manage his/her profile.

- Member can track his/her attendance.

- Members can view training videos, workout schedules, and diet charts provided by their trainer.

- Members can buy Gym products.

- Members can manage payments for membership renewal.

- Members can provide feedback for the website and Gym.

TRAINER:

Trainers are the gym's employees. They manage members' workout schedules and diet charts. A trainer can do the following:

- A trainer can log in.

- A trainer can manage his/her own profile.

- A trainer can view or track members' attendance as well as his/her own.

- A trainer can manage members’ workout schedules and diet charts.

- Trainers can upload workout videos for members.

- Trainers can give reward points to members on the basis of their weekly performance.

GUEST:

Guests can only browse the gym website. They can use its other features only after registering with the gym and the website.

- Guest users can view the website.

- Guest users can register/apply for a Gym membership.
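Taken together, the four requirement lists above form a role-based access matrix. The following is a minimal sketch of that matrix as a Python lookup table. The permission names are hypothetical and do not appear in the report. Following the statement that the Admin has every access right, the Admin check simply returns True.

```python
from enum import Enum
from typing import Dict, FrozenSet


class Role(Enum):
    ADMIN = "admin"
    TRAINER = "trainer"
    MEMBER = "member"
    GUEST = "guest"


# Hypothetical permission names, roughly one per requirement bullet above.
PERMISSIONS: Dict[Role, FrozenSet[str]] = {
    Role.TRAINER: frozenset({
        "login", "manage_own_profile", "view_own_attendance",
        "view_member_attendance", "manage_workouts", "upload_videos",
        "give_reward_points",
    }),
    Role.MEMBER: frozenset({
        "login", "manage_own_profile", "view_own_attendance", "view_workouts",
        "buy_products", "pay_membership_renewal", "give_feedback",
    }),
    Role.GUEST: frozenset({"view_website", "apply_for_membership"}),
}


def can(role: Role, action: str) -> bool:
    """Return True if `role` may perform `action`."""
    if role is Role.ADMIN:
        # The Admin "has every access right to the website".
        return True
    return action in PERMISSIONS.get(role, frozenset())
```

In a Django project, the same matrix could also be expressed with Django's built-in groups and permissions. The dictionary here just makes the matrix explicit.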

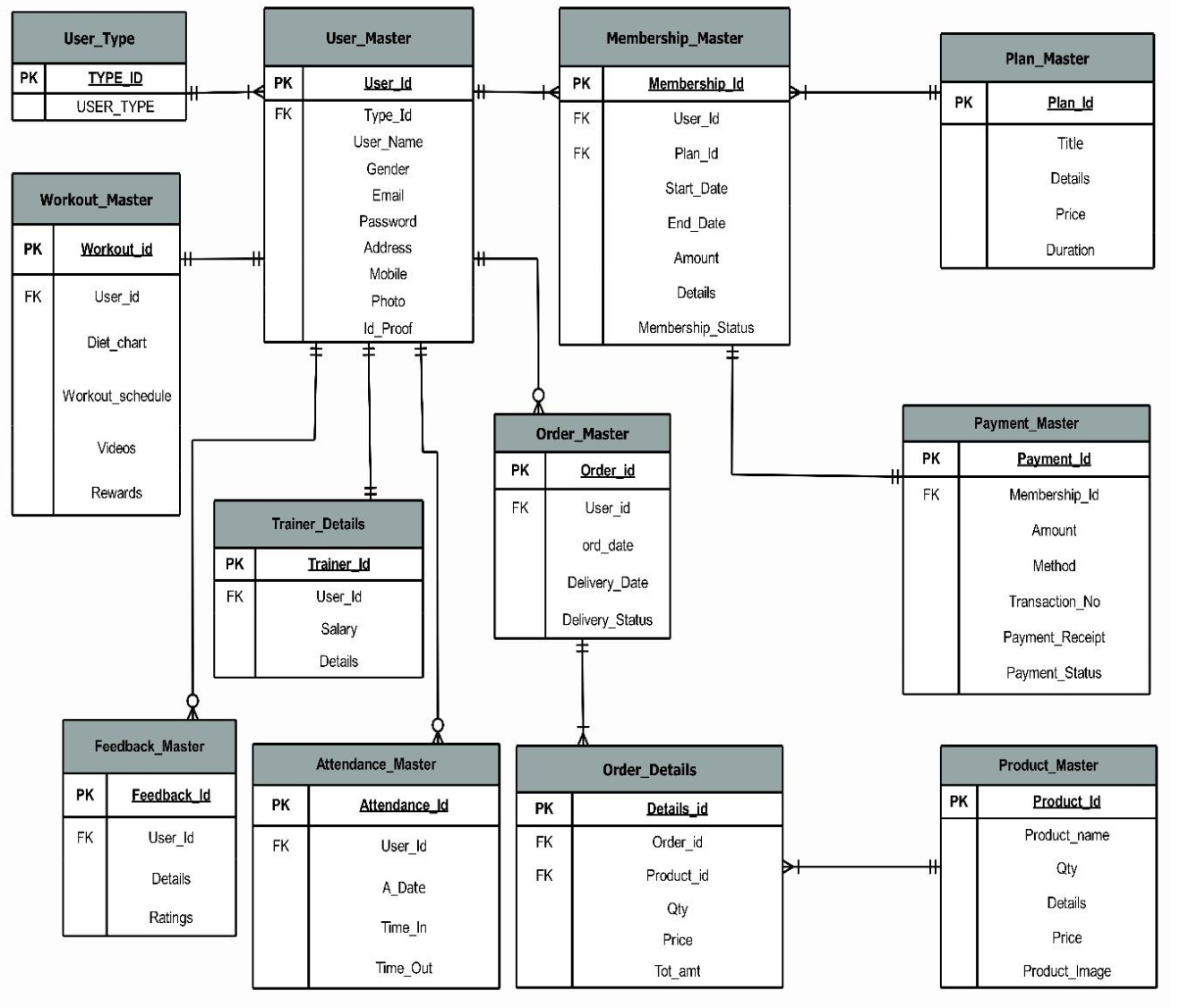

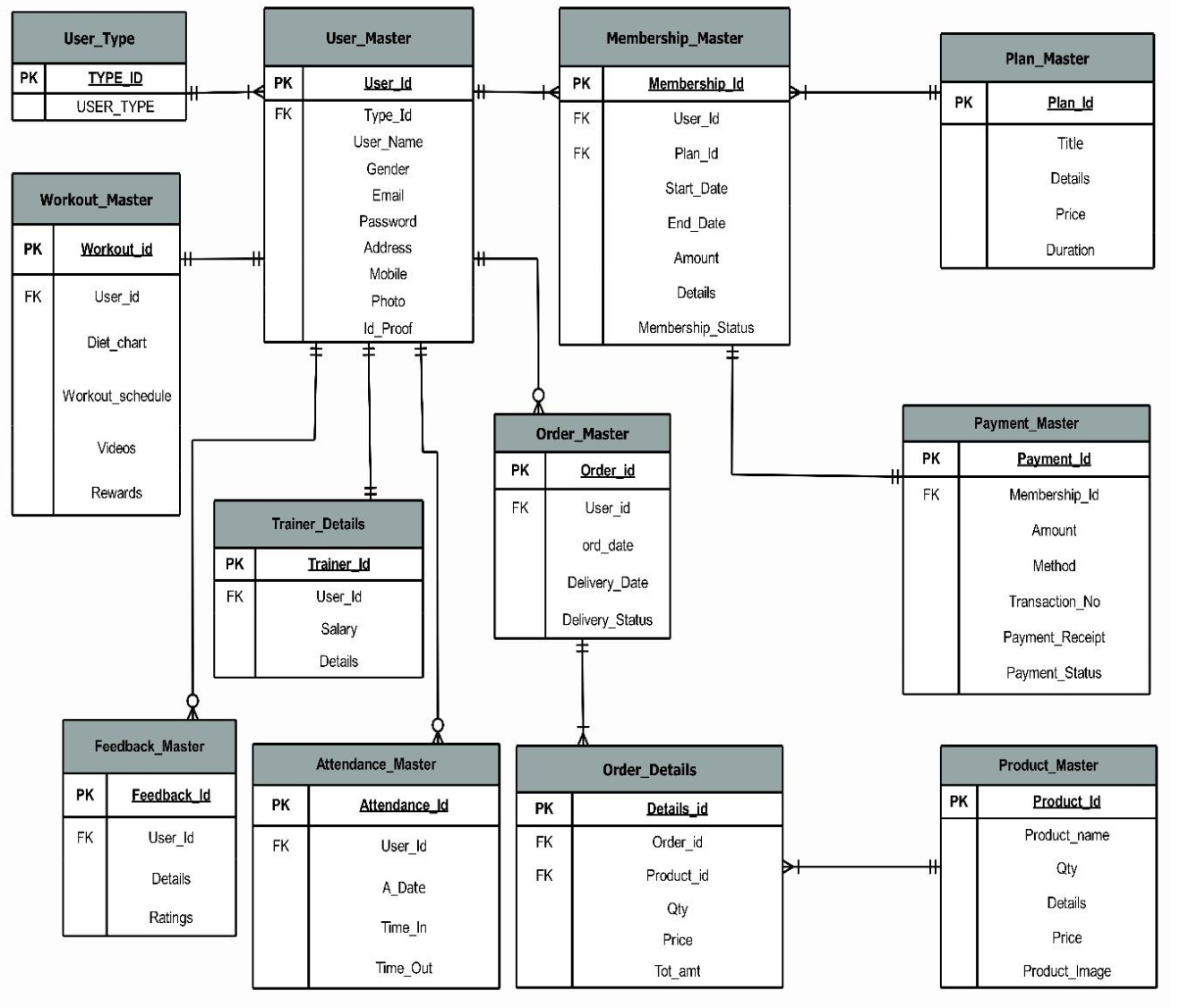

ER Diagram:

Existing System

- Customer data is stored manually either in registers or in MS Excel.

- Books are maintained to keep track of Customer attendance.

- Payment transactions are kept in books.

- Currently, the gym has no advanced system for managing its operations.

Proposed System

- In the new system, trainers and members can track their attendance from anywhere.

- In the new system, a member can get a diet chart suited to his/her workout plan.

- In the new system, members get a workout schedule from their trainer and earn reward points for achieving workout targets.

- In this system, members can watch workout videos provided by their trainers, which helps them exercise at home.

- Members can also purchase gym products.

Tools And Technology Used

Frontend: HTML5, CSS 2.1, JavaScript

Backend: MySQL 5.5

Framework: Django 3.1 (Python 3.8)

Other Tools: Microsoft PowerPoint 2019, Edraw Max 9.0, Microsoft Visio 2016, and Microsoft Word 2019
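Because the stack pairs Django with MySQL, the database connection is configured through Django's `DATABASES` setting. Below is a minimal sketch of that setting. The database name, user, and password are placeholders, not values from the project. Django's MySQL backend also needs a MySQL driver, such as `mysqlclient`, to be installed.

```python
# settings.py (excerpt): all names and credentials below are placeholders.
DATABASES = {
    "default": {
        "ENGINE": "django.db.backends.mysql",
        "NAME": "gym_db",          # hypothetical database name
        "USER": "gym_user",        # hypothetical MySQL user
        "PASSWORD": "change-me",   # placeholder; keep real secrets out of source control
        "HOST": "localhost",
        "PORT": "3306",            # MySQL's default port
    }
}
```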

Data Dictionary

1) Table Name: User_Type

Table Description: Contains details of user types. It identifies whether each user in the User_Master table is a member, trainer, or admin.

2) Table Name: User_Master

Table Description: Contains details about users. It holds all the basic information about each user, such as name, email, gender, address, and mobile number, along with the user type.

3) Table Name: Plan_Master

Table Description: Contains details about membership plans. It will contain all the basic details about plans that a member can choose for a gym membership.

4) Table Name: Membership_Master

Table Description: Contains details about members’ memberships. It will contain all information regarding memberships of members according to their chosen plan.

5) Table Name: Trainer_Details

Table Description: Contains details about trainers. It holds additional trainer details that supplement those in the User_Master table.

6) Table Name: Payment_Master

Table Description: Contains details about payments. It holds the payment details of members' memberships.

7) Table Name: Product_Master

Table Description: Contains details about Gym products. It will contain basic information about products that the admin wants to sell and that a member can buy.

8) Table Name: Feedback_Master

Table Description: Contains details about feedback. It will contain feedback details given by members about the Gym.

9) Table Name: Workout_Master

Table Description: Contains workout details of members. It will contain members’ workout details like diet charts, workout schedules, workout videos, and reward points provided by trainers.

10) Table Name: Order_Master

Table Description: Contains order details. It holds the basic details of each product order placed by a member, such as which member placed it, the order date, and the delivery date.

11) Table Name: Order_Details

Table Description: Contains order line details. It holds additional information about each order, such as the product purchased, its quantity, and its price, in relation to the Order_Master table.

12) Table Name: Attendance_Master

Table Description: Contains details about the attendance of users. It will contain day-to-day attendance details of members and trainers which will be added by admin.
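The data dictionary names the tables but not their columns. The sketch below shows one way some of these tables might relate. It uses Python's built-in `sqlite3` as a stand-in for MySQL, so it runs without a database server. All column names are assumptions. Trainer_Details, Payment_Master, Feedback_Master, and Workout_Master are left out for brevity. The query mirrors the report of each member's current plan.

```python
import sqlite3
from typing import List, Tuple

# All column names are assumptions; only the table names come from the data dictionary.
SCHEMA = """
CREATE TABLE User_Type (
    user_type_id INTEGER PRIMARY KEY,
    type_name    TEXT NOT NULL UNIQUE            -- 'admin', 'trainer' or 'member'
);
CREATE TABLE User_Master (
    user_id      INTEGER PRIMARY KEY,
    user_type_id INTEGER NOT NULL REFERENCES User_Type(user_type_id),
    name         TEXT NOT NULL,
    email        TEXT NOT NULL UNIQUE,
    gender       TEXT,
    address      TEXT,
    mobile_no    TEXT
);
CREATE TABLE Plan_Master (
    plan_id INTEGER PRIMARY KEY,
    title   TEXT NOT NULL,                       -- e.g. 'Basic', 'Standard', 'Ultimate'
    amount  REAL NOT NULL
);
CREATE TABLE Membership_Master (
    membership_id INTEGER PRIMARY KEY,
    user_id       INTEGER NOT NULL REFERENCES User_Master(user_id),
    plan_id       INTEGER NOT NULL REFERENCES Plan_Master(plan_id),
    start_date    TEXT NOT NULL,                 -- ISO format 'YYYY-MM-DD'
    end_date      TEXT NOT NULL
);
CREATE TABLE Product_Master (
    product_id INTEGER PRIMARY KEY,
    name       TEXT NOT NULL,
    price      REAL NOT NULL,
    quantity   INTEGER NOT NULL DEFAULT 0        -- units in stock
);
CREATE TABLE Order_Master (
    order_id        INTEGER PRIMARY KEY,
    user_id         INTEGER NOT NULL REFERENCES User_Master(user_id),
    order_date      TEXT NOT NULL,
    delivery_date   TEXT,
    delivery_status TEXT NOT NULL DEFAULT 'pending'
);
CREATE TABLE Order_Details (
    order_detail_id INTEGER PRIMARY KEY,
    order_id        INTEGER NOT NULL REFERENCES Order_Master(order_id),
    product_id      INTEGER NOT NULL REFERENCES Product_Master(product_id),
    quantity        INTEGER NOT NULL CHECK (quantity > 0),
    price           REAL NOT NULL                -- unit price at the time of the order
);
CREATE TABLE Attendance_Master (
    attendance_id   INTEGER PRIMARY KEY,
    user_id         INTEGER NOT NULL REFERENCES User_Master(user_id),
    attendance_date TEXT NOT NULL,
    status          TEXT NOT NULL CHECK (status IN ('present', 'absent')),
    UNIQUE (user_id, attendance_date)            -- one entry per user per day
);
"""


def create_schema(conn: sqlite3.Connection) -> None:
    """Enable foreign-key checks (off by default in SQLite), then create the tables."""
    conn.execute("PRAGMA foreign_keys = ON")
    conn.executescript(SCHEMA)


def members_with_plans(conn: sqlite3.Connection) -> List[Tuple[str, str]]:
    """Name and plan title of every member with a membership."""
    return conn.execute(
        """
        SELECT u.name, p.title
        FROM Membership_Master AS m
        JOIN User_Master AS u ON u.user_id = m.user_id
        JOIN Plan_Master AS p ON p.plan_id = m.plan_id
        ORDER BY u.name
        """
    ).fetchall()
```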

Modules Functionalities:

ADMIN SIDE:

- Login page for the admin, with validation: the email ID field must not be empty, and the email ID must match the required format (it must contain "@" and ".").

- The admin is not allowed to log in with an invalid username.

- Change Password page of the admin: a validation message is shown when the retyped password does not match.

- Successful login with the correct admin username and password.

- Home page of the admin.

- Admin dashboard: it shows a side panel that leads to each page. The page also shows direct links to, and information about, User Types, Users, Trainer Details, Attendance, and Plans.

- Add User Type: the admin adds a user type, e.g. member.

- When the View tab of User Type is clicked, the user types are listed on this page.

- The Add Users window can be opened from the side panel, and the admin can add a new user there.

- View Users window: all users are listed, and the admin can edit or delete them.

- When the View part of Plans in the side menu is clicked, plan details are shown.

- Add Plans window: the admin can add new plan details.

- The admin can add a user's membership details. Here a validation message shows that the Amount field is required.

- When the View part of Membership Details in the side menu is clicked, the membership details table is shown.

- Add Attendance window: the admin can add users' attendance details.

- When the View part of Attendance in the side menu is clicked, users' attendance details are shown.

- When the Add part of Trainer Details is clicked, the admin can add a trainer's details.

- View part of Trainer Details: trainers' details are shown.

- The Add Payment window opens when the Add part of Payment Details is clicked. Here the admin can approve the payment status.

- When the View part of Payment Details is clicked, payment details are shown.

- The Add Product window opens when the Add part of Products is clicked.

- When the View part of the Products tab is clicked, all products are shown with their price and quantity.

- Add Workout Details window: the admin can add workout details for a particular user by adding a diet chart, workout schedule, and workout videos.

- View Workout Details window: all details of a user's workout (including diet chart and schedule) are shown.

- View Order window: all order details, including delivery status, are shown.

- View Feedback window: Admin can view feedback and ratings given by users in this window.
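The admin login rule above can be expressed as a small validator: the email ID must not be empty and must contain "@" and ".". This sketch assumes the check runs server-side in Python. The pattern is intentionally loose and only restates the rule in the text. Django's own `EmailField` applies a stricter check.

```python
import re
from typing import Optional

# Loose pattern: non-empty text, "@", then a domain part containing ".".
# It restates the rule above and is not a full RFC 5322 email check.
EMAIL_PATTERN = re.compile(r"[^@\s]+@[^@\s]+\.[^@\s]+")


def validate_email(value: str) -> Optional[str]:
    """Return an error message, or None if the email ID passes validation."""
    value = value.strip()
    if not value:
        return "Email ID should not be empty."
    if not EMAIL_PATTERN.fullmatch(value):
        return "Enter a valid email ID (it must contain '@' and '.')."
    return None
```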

TRAINER SIDE:

- Trainer login page.

- Trainer dashboard: it shows the trainer's information with Edit Profile and Change Password links, and a side panel that leads to each page. The page also shows direct links to, and information about, Attendance and Workout Details.

- When the Change Password link is clicked, the trainer is redirected to the Change Password page, where he/she can change the login password.

- Change Password validations.

- When My Attendance under Attendance in the side menu is clicked, the trainer's own attendance details are shown.

- When Members Attendance under Attendance in the side menu is clicked, members' attendance details are shown.

- Dashboard showing the Add and View options in the Workout menu of the side bar.

- Add Workout Details window: the trainer can add workout details for a particular member by adding a diet chart, workout schedule, and workout videos.

- View Workout Details Window: Details of member workouts including diet chart, workout schedule, and total reward points are shown in this window.
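Both the admin and trainer change-password pages check that the retyped password matches the new one. Below is a minimal sketch of such a check. The minimum length and the rule that the new password must differ from the old one are hypothetical additions. Verifying the old password against the stored hash is left to the authentication layer. Django, for example, provides `PasswordChangeForm` in `django.contrib.auth.forms` for that purpose.

```python
from typing import List

MIN_LENGTH = 8  # hypothetical minimum; the report does not state one


def change_password_errors(old: str, new: str, retyped: str) -> List[str]:
    """Collect validation errors for a change-password form submission."""
    errors: List[str] = []
    if not old or not new or not retyped:
        errors.append("All password fields are required.")
        return errors
    if new != retyped:
        errors.append("The retyped password does not match the new password.")
    if new == old:
        errors.append("The new password must differ from the old password.")
    if len(new) < MIN_LENGTH:
        errors.append(f"The new password must be at least {MIN_LENGTH} characters long.")
    return errors
```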

GUEST SIDE:

- Home Page: the starting point (first page) of the website.

- About options.

- About Us page: gives information about the gym.

- FAQ Page: contains frequently asked questions with their answers.

- Testimonial Page: contains reviews given by members.

- Contact Us Page: contains the gym's contact details.

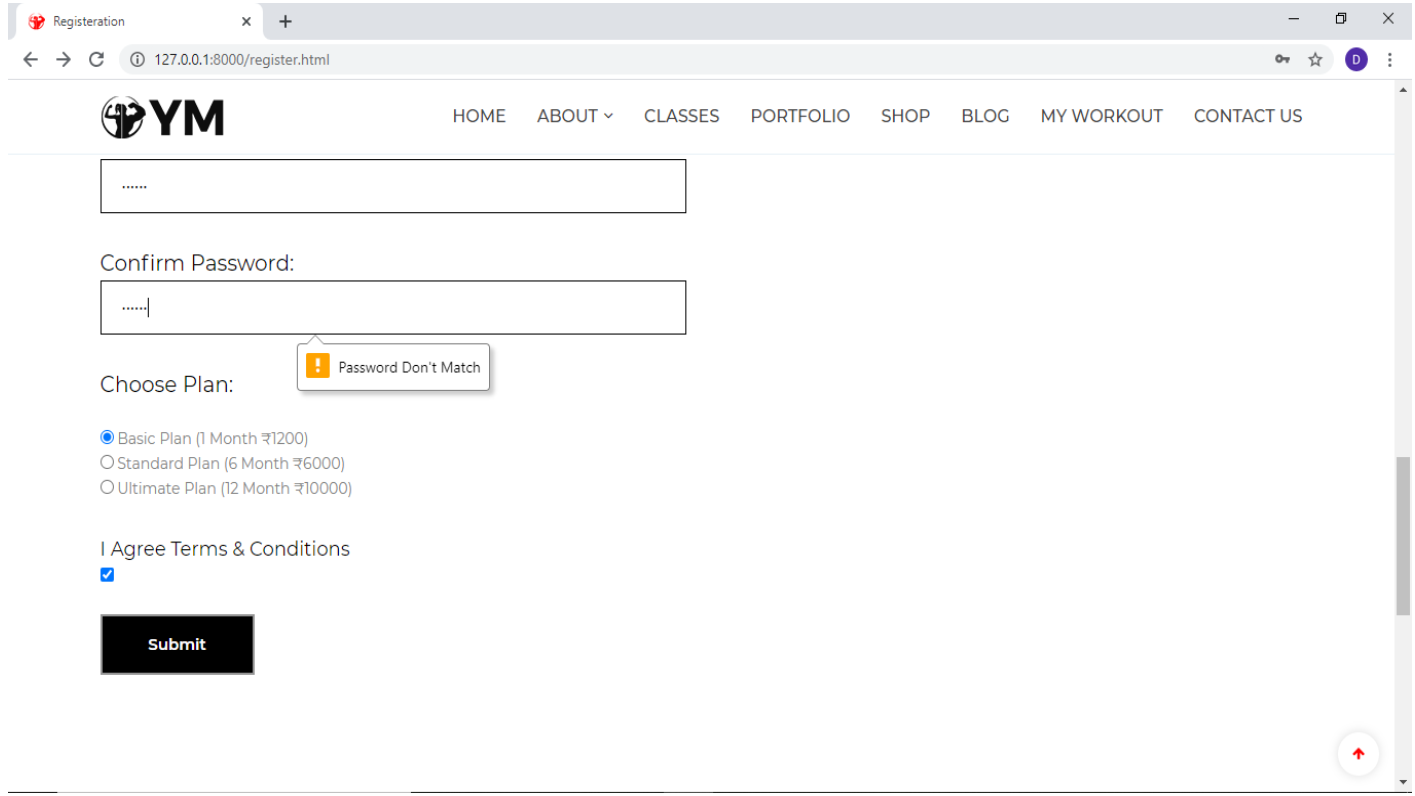

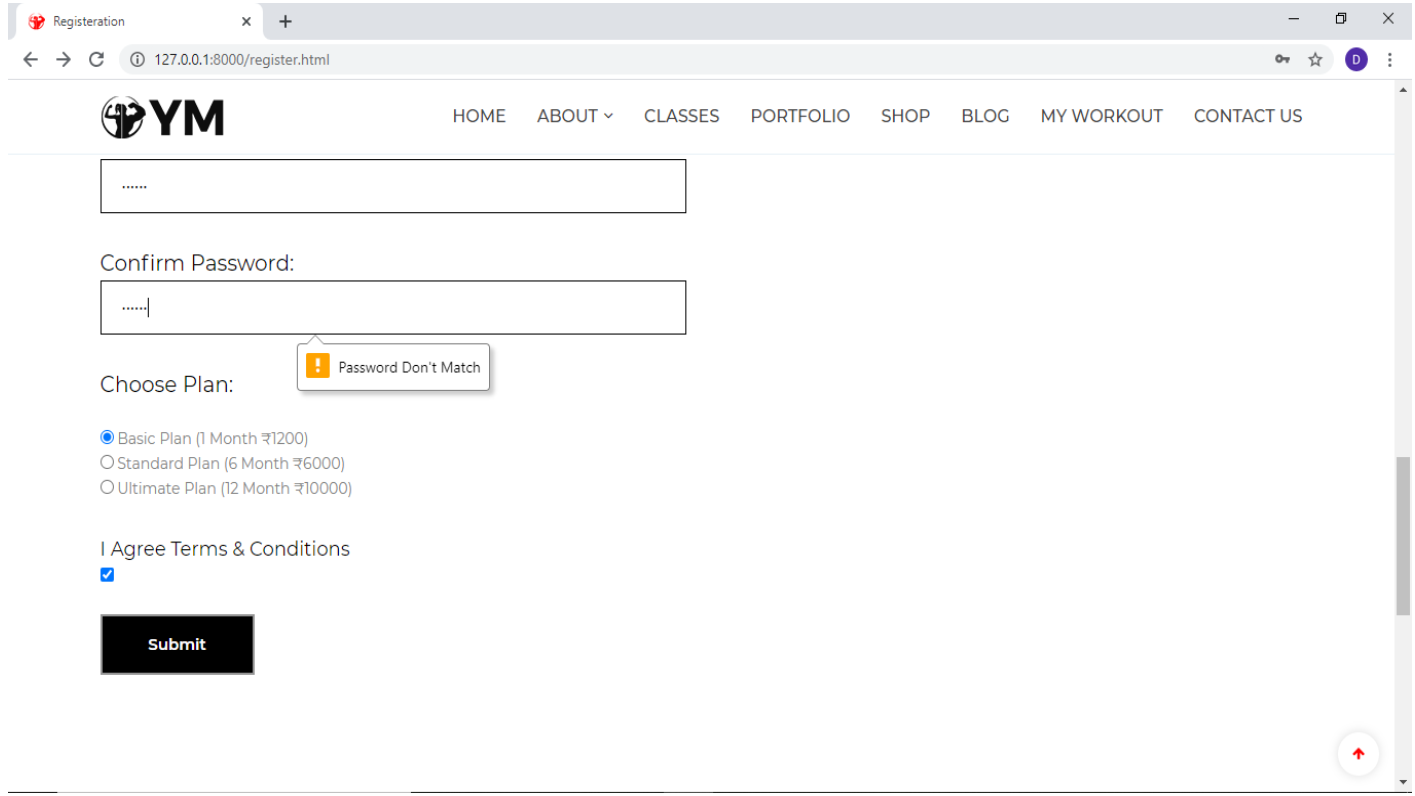

- When a user clicks the Apply For Membership tab, the Registration page opens. It collects the user's data so that a login can be created.

- Registration page showing how to apply for a gym membership.

- Registration page displaying validation messages.

- After successfully filling in the registration form, the user is redirected to the Payment Confirmation form, which shows the user's information and the plan chosen during registration. The user then selects how the payment was made, enters the transaction number, and uploads the payment receipt.

- After successfully submitting the payment confirmation form, the user is redirected to the Login page, which can also be opened from the Login tab.

- Login page with validation.

- Forgot Password page: asks for the registered email ID.

- Password received by the customer through email.
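The payment confirmation step above asks for the payment method, a transaction number, and a receipt upload. Below is a sketch of the server-side checks. The set of payment modes, the accepted receipt file types, and the class and field names are all hypothetical; the report specifies none of them.

```python
from dataclasses import dataclass
from pathlib import PurePath
from typing import List, Optional

# Hypothetical options; the report does not list accepted payment modes or file types.
PAYMENT_MODES = {"cash", "upi", "card", "bank_transfer"}
RECEIPT_EXTENSIONS = {".jpg", ".jpeg", ".png", ".pdf"}


@dataclass
class PaymentConfirmation:
    user_id: int
    plan_id: int
    payment_mode: str
    transaction_no: str
    receipt_filename: Optional[str]  # None when no file was uploaded


def validate_payment_confirmation(form: PaymentConfirmation) -> List[str]:
    """Return a list of validation errors; an empty list means the form is valid."""
    errors: List[str] = []
    if form.payment_mode not in PAYMENT_MODES:
        errors.append("Choose how the payment was made.")
    if not form.transaction_no.strip():
        errors.append("Transaction number is required.")
    if not form.receipt_filename:
        errors.append("Upload the payment receipt.")
    elif PurePath(form.receipt_filename).suffix.lower() not in RECEIPT_EXTENSIONS:
        errors.append("Receipt must be an image or PDF file.")
    return errors
```

Even after a submission passes these checks, its payment status would still wait for the admin's approval, as described on the admin side.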

USER SIDE:

- After a successful login, the user is redirected to the home page, where the Apply For Membership and Login headings are replaced by My Account, with Profile, Membership, Attendance, and Logout options.

- When the user clicks Membership, he/she is redirected to the membership page, which contains the user's membership details.

- When the user clicks the Attendance option in the My Account section, he/she is redirected to the Attendance page, which contains the user's attendance details.

- Shop Page: lists all the products, with details, that the gym sells.

- Add to Cart option on a product.

- Shop page showing the Add to Cart option for a product.

- After Add to Cart is clicked on the Shop page, the Cart opens and shows the items in the cart.

- To buy more than one product, the customer can click Buy More and add other products.

- When the user clicks Proceed to Checkout, the Checkout page opens. It shows the order and billing details along with a summary of the order.

- After clicking Place Order, the user sees an order-placed message and a View Order option.

- When the user clicks View Order, he/she is redirected to the My Orders page, which lists all orders made by the member.

- When the user clicks More Details, he/she is redirected to the Order Details page, which shows additional details about the order.

- My Workout Page: lets the user download the diet chart, workout schedule, and workout videos provided by the trainer.

- Blog Page: contains fitness blogs for users to read.

- Home page showing the My Account section with the Profile, Membership, Attendance, and Logout options.

- Clicking the Profile option in the My Account section opens the My Account page. It shows all details of the logged-in user, such as name, address, gender, email, and mobile, with Edit Profile and Change Password options.

- When the user clicks Change Password, he/she is redirected to the Change Password page, where the old password can be replaced with a new one.
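The Add to Cart, Buy More, and checkout steps above come down to three operations: merging repeated products into a cart, totaling the line items, and turning the cart into order rows. Below is a minimal sketch with hypothetical class and field names. It uses `Decimal` so that prices avoid floating-point rounding errors.

```python
from dataclasses import dataclass, field
from decimal import Decimal
from typing import Dict, List, Tuple


@dataclass(frozen=True)
class Product:
    product_id: int
    name: str
    price: Decimal


@dataclass
class Cart:
    # product_id -> (product, quantity); insertion order is kept for display.
    items: Dict[int, Tuple[Product, int]] = field(default_factory=dict)

    def add(self, product: Product, quantity: int = 1) -> None:
        """Add to Cart; adding the same product again increases its quantity."""
        if quantity <= 0:
            raise ValueError("quantity must be positive")
        _, current = self.items.get(product.product_id, (product, 0))
        self.items[product.product_id] = (product, current + quantity)

    def summary(self) -> List[Tuple[str, int, Decimal]]:
        """Checkout summary lines: (product name, quantity, line total)."""
        return [(p.name, qty, p.price * qty) for p, qty in self.items.values()]

    def total(self) -> Decimal:
        """Order total shown on the Checkout page."""
        return sum((line for _, _, line in self.summary()), Decimal("0"))

    def to_order_details(self, order_id: int) -> List[Tuple[int, int, int, Decimal]]:
        """Rows for Order_Details: (order_id, product_id, quantity, unit price)."""
        return [(order_id, p.product_id, qty, p.price) for p, qty in self.items.values()]
```

Copying the unit price into each order row, as this sketch does, keeps past orders accurate even if a product's price later changes in Product_Master.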

- Change Password validations.

- Logout option in the My Account menu.

- When the user clicks Logout, he/she is redirected to the Login page.

REPORTS:

- Report of all the users registered with Dynamo Fitness.

- Filters for the user report: by user type (members or trainers) and by gender.

- User report after applying the user type filter (Member) and the gender filter (Female): only female gym members are shown.

- Report on members' current plans and memberships, showing each member's name and plan type.

- Membership report using the start date filter on plan start dates.

- The report shows the list of members whose memberships start in a selected month.

- Filter based on plan title, i.e. Basic, Standard, or Ultimate.

- List of members registered with the Standard plan.

- Membership report after using the print option, showing each member's plan with its start and end dates.

- Report of feedback given by users, with gender and rating filters.

- Feedback report using the rating filter, showing users who gave a specific rating.

- Report listing users who gave a rating of 9.

- Feedback report after selecting the print option.

- PDF view of the feedback report using the View PDF option.

- Product order report showing each user ID with the products ordered.

- Product order report showing the product name filter with the different products available.

- Report after applying the product name filter (e.g. dumbbells), showing the product ID and the user IDs of the users who ordered it.

- Report after applying the delivery status filter, showing the products that have been delivered.

- The attendance report shows the day-to-day attendance of users (members and trainers).

- The attendance report can be filtered by user type, i.e. Member or Trainer.

- With the Trainer filter applied, the attendance report shows only trainers' attendance.

- Attendance report after selecting the print option.

- Date filter option, which shows users' attendance on a specific date.

- Attendance report after filtering by attendance date and gender.
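Most of the reports above apply one or more equality filters to a list of rows, such as user type, gender, plan title, rating, or delivery status. Some apply a date filter instead. Below is a minimal helper that captures this pattern; its names are hypothetical. A criterion left as `None` means "no filter selected". A criterion can also be a predicate, as with the start-month filter of the membership report.

```python
from datetime import date
from typing import Any, Callable, Dict, Iterable, List

Row = Dict[str, Any]


def _matches(value: Any, expected: Any) -> bool:
    """A criterion is either a value compared with == or a predicate to call."""
    return bool(expected(value)) if callable(expected) else value == expected


def filter_rows(rows: Iterable[Row], **criteria: Any) -> List[Row]:
    """Keep rows that satisfy every criterion; criteria set to None are skipped."""
    active = {key: exp for key, exp in criteria.items() if exp is not None}
    return [
        row for row in rows
        if all(_matches(row.get(key), exp) for key, exp in active.items())
    ]


def starts_in_month(year: int, month: int) -> Callable[[Any], bool]:
    """Predicate for the 'memberships starting in a selected month' filter."""
    return lambda d: isinstance(d, date) and d.year == year and d.month == month
```

For example, `filter_rows(users, user_type="member", gender="female")` corresponds to the female-members report. In the Django application itself, the same filters would more naturally be written as `QuerySet.filter()` calls that MySQL evaluates.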

CONCLUSION

The entire duration of this project has been a great learning experience for us. It introduced us to how real-life projects work and taught us to overcome obstacles while developing them. By developing this Gym Management web application, we conclude that we have achieved the following aims:

1) Building a platform where people can apply for a gym membership from anywhere and start their workout activities, even at home.

2) We believe that this website has made it easier for the GYM Owner to manage the information regarding different aspects of the Gym.

3) This website has also made it easier for trainers to manage the workout activities of members. We also hope to expand the scale of the project and make it ubiquitous by developing it for all digital platforms.
