Agenda:

- Abstract

- Existing system

- Drawbacks of existing system

- Proposed system

- Methodology

- Architecture & flow

- System requirements

Abstract:

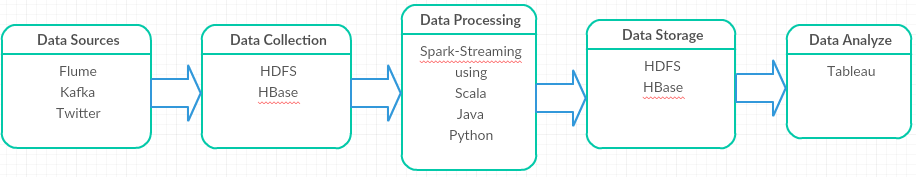

- This project uses Spark Streaming, a component of Apache Spark.

- Spark Streaming can be used to analyze both streaming data and batch data.

- It can read data from sources such as HDFS, Flume, Kafka, and Twitter, process it with Scala, Java, or Python, and analyze it according to the scenario.

- The project implements streaming data analysis that extracts errors from log data and analyzes the types of errors.

Existing System:

- Apache Storm is an open-source engine that processes data in real time.

- Distributed architecture.

- Written predominantly in Clojure and Java.

- Stream processing.

- It processes one incoming event at a time.

Drawbacks of Existing System:

- One-at-a-time processing

- Higher network latency: every event makes its own network round trip. For 10 events:

  Total time = 10 × (network latency + server latency + network latency) = 20 × (network latency) + 10 × (server latency)

- “At least once” delivery semantics.

- Lower fault tolerance

- Duplicate data: an event that is redelivered after a failure can be processed more than once
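The duplicate-data drawback follows from at-least-once delivery. The simulation below is a minimal sketch in plain Python, not Storm code. It assumes that the source keeps each event until that event is acknowledged, and that it redelivers the event if the acknowledgement is lost after the output was already written. The second sink shows one general way to make redelivery harmless: writes are keyed by event position, so repeating a write is idempotent. The names `deliver`, `appending_sink`, `keyed_sink` and `run` are hypothetical, and the keyed sink is not a claim about how Spark achieves exactly-once semantics.

```python
from collections import deque


def deliver(events, write, lose_ack_for=None):
    """Deliver events one at a time with at-least-once semantics.

    Each event stays at the head of the queue until it is acknowledged.
    If the acknowledgement for the event at position `lose_ack_for` is
    lost once (simulating a crash after the output was written), that
    event is delivered and written a second time.
    """
    queue = deque(enumerate(events))  # (position, event) pairs awaiting ack
    ack_already_lost = False
    while queue:
        position, event = queue[0]
        write(position, event)  # output is written before the ack is sent
        if position == lose_ack_for and not ack_already_lost:
            ack_already_lost = True  # ack lost: the event stays queued
            continue                 # and is redelivered on the next pass
        queue.popleft()  # ack received: the event is done


def appending_sink():
    """Sink that appends every write, so a redelivered event is duplicated."""
    rows = []
    return rows, lambda position, event: rows.append(event)


def keyed_sink():
    """Sink keyed by event position: rewriting the same key is harmless."""
    rows = {}

    def write(position, event):
        rows[position] = event

    return rows, write


def run(make_sink, events, lose_ack_for=None):
    """Deliver `events` into a fresh sink and return what the sink holds."""
    rows, write = make_sink()
    deliver(events, write, lose_ack_for)
    return rows


print(run(appending_sink, ["e1", "e2", "e3"], lose_ack_for=1))
# ['e1', 'e2', 'e2', 'e3']  (e2 is duplicated)
print(list(run(keyed_sink, ["e1", "e2", "e3"], lose_ack_for=1).values()))
# ['e1', 'e2', 'e3']
```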

Proposed System and Advantages:

- Micro-batch processing.

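- Lower network latency: the 10 events travel together as one micro-batch, so the network round trip is paid only once:

  Total time = network latency + 10 × (server latency) + network latency = 2 × (network latency) + 10 × (server latency)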

- “Exactly once” delivery semantics.

- High fault tolerance

- No duplicate data
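The two total-time formulas in the drawbacks and proposed-system lists can be checked with a small calculator. This is a sketch that takes the document's cost model at face value: a record-at-a-time event pays one round trip each, while a batch pays a single round trip and the server handles its events sequentially. The latency values are illustrative placeholders, not measurements. `group_into_micro_batches` is a hypothetical helper showing the micro-batching idea. It groups timestamped events into fixed time windows, in the spirit of a Spark Streaming batch interval, but it does not use the Spark API.

```python
from collections import defaultdict


def one_at_a_time_time(n_events, network_latency, server_latency):
    """Record-at-a-time cost: n * (network + server + network)."""
    return n_events * (network_latency + server_latency + network_latency)


def micro_batch_time(n_events, network_latency, server_latency):
    """Micro-batch cost: network + n * server + network."""
    return network_latency + n_events * server_latency + network_latency


def group_into_micro_batches(timestamped_events, batch_interval):
    """Group (timestamp_seconds, event) pairs into consecutive windows of
    `batch_interval` seconds; windows with no events are skipped."""
    batches = defaultdict(list)
    for timestamp, event in timestamped_events:
        batches[int(timestamp // batch_interval)].append(event)
    return [batches[window] for window in sorted(batches)]


# Illustrative numbers only: 5 ms network latency, 1 ms server latency.
print(one_at_a_time_time(10, network_latency=5, server_latency=1))  # 110
print(micro_batch_time(10, network_latency=5, server_latency=1))    # 20
print(group_into_micro_batches([(0.1, "a"), (0.4, "b"), (1.2, "c")], 1.0))
# [['a', 'b'], ['c']]
```

With one event per batch the two formulas agree. The saving comes only from sharing the round trip across the events in a batch.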

Methodology:

- Consider several types of logs stored on one host.

- These logs accumulate into a large number of log files, from which the information needed for monitoring must be extracted.

- Flume sends these logs to another host, where they are processed.

- For the streaming, real-time log data, the solution extracts the error logs.

- A file containing keywords for each error type is provided to the Spark processing logic to identify errors.

- The processing logic is written in Spark using Scala or Java, and the results are stored in HDFS/HBase for tracking.

- Flume sends the streaming data to a port. Spark Streaming receives the data from that port, checks which log lines contain error information, extracts those lines, and stores them in HDFS or HBase.

- Tableau visualizations are built on the stored error data to categorize the errors.
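The keyword-based error extraction described in the methodology can be sketched in plain Python. Here it runs on one in-memory micro-batch instead of a live Flume/Spark stream. The keyword-file format (`ErrorType: keyword, keyword`), the error types and the sample log lines are all hypothetical, because the project's actual keyword file is not shown. In a Spark Streaming job, logic like `classify` would typically run inside a per-batch transformation such as `DStream.filter`/`map`, and the output would be written to HDFS or HBase. That wiring is omitted here. The final `Counter` is only a quick per-type tally and does not replace the Tableau categorization.

```python
from collections import Counter

# Hypothetical keyword file: one "ErrorType: keyword, keyword" entry per line.
KEYWORD_FILE = """\
# error type: keywords (case-insensitive substrings)
DatabaseError: SQLException, connection refused
OutOfMemory: OutOfMemoryError, heap space
Timeout: timed out, TimeoutException
"""


def parse_keyword_file(text):
    """Parse 'ErrorType: kw1, kw2' lines into {error_type: [kw1, kw2]}."""
    keywords = {}
    for raw_line in text.splitlines():
        line = raw_line.strip()
        if not line or line.startswith("#"):
            continue  # skip blank lines and comments
        error_type, _, keyword_part = line.partition(":")
        keywords[error_type.strip()] = [
            kw.strip().lower() for kw in keyword_part.split(",") if kw.strip()
        ]
    return keywords


def classify(log_line, keywords):
    """Return the first error type whose keyword appears in the line, else None."""
    lowered = log_line.lower()
    for error_type, type_keywords in keywords.items():
        if any(kw in lowered for kw in type_keywords):
            return error_type
    return None


def extract_errors(batch, keywords):
    """Keep only the lines of one micro-batch that match an error keyword,
    tagged with their error type."""
    tagged = ((classify(line, keywords), line) for line in batch)
    return [(error_type, line) for error_type, line in tagged if error_type]


keywords = parse_keyword_file(KEYWORD_FILE)
batch = [
    "INFO request served",
    "ERROR java.sql.SQLException: Connection refused",
    "ERROR java.lang.OutOfMemoryError: Java heap space",
    "WARN upstream call timed out",
]
errors = extract_errors(batch, keywords)
print([error_type for error_type, _ in errors])
# ['DatabaseError', 'OutOfMemory', 'Timeout']
print(Counter(error_type for error_type, _ in errors))  # per-type counts
```

Checking keywords in file order means a line that matches several types is assigned to the first type listed. Ordering the keyword file from most to least specific is one simple way to control that.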

Architecture & Flow:

System Requirements:

- Java

- Hadoop environment

- Apache Spark

- Apache Flume

- Tableau Software

- 8 GB RAM

- 64-bit processor
